
Artificial Intelligence (CS607)

- Unit preference: prefer using a clause with one literal. This produces shorter clauses.
- Set of support: try to involve the thing you are trying to prove. Choose a resolution involving the negated goal. These are relevant clauses, so we move 'towards the solution'.
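The two heuristics above can be combined in a small propositional resolution prover. The sketch below is an illustration under our own assumptions, not the method of these notes: clauses are frozensets of string literals, `~` marks negation, and the hypothetical `refute` function applies the set-of-support restriction (every resolution step uses a clause descended from the negated goal) together with unit preference (the shortest supported clause is resolved first).

```python
from __future__ import annotations

def negate(lit: str) -> str:
    """Complement of a literal: 'P' <-> '~P'."""
    return lit[1:] if lit.startswith("~") else "~" + lit

def resolvents(c1: frozenset[str], c2: frozenset[str]) -> list[frozenset[str]]:
    """All clauses obtained by resolving c1 and c2 on one complementary pair."""
    out = []
    for lit in c1:
        if negate(lit) in c2:
            out.append((c1 - {lit}) | (c2 - {negate(lit)}))
    return out

def refute(kb: list[frozenset[str]], negated_goal: list[frozenset[str]],
           max_steps: int = 1000) -> bool:
    """Return True if the empty clause is derived from kb plus the negated goal."""
    usable = set(kb)             # clauses allowed as the second parent
    sos = list(negated_goal)     # set of support: starts as the negated goal
    seen = set(kb) | set(negated_goal)
    for _ in range(max_steps):
        if not sos:
            return False         # set of support exhausted: no refutation found
        sos.sort(key=len)        # unit preference: shortest (unit) clauses first
        given = sos.pop(0)
        for other in list(usable):
            for r in resolvents(given, other):
                if not r:
                    return True  # empty clause: contradiction, so the goal holds
                if r not in seen:
                    seen.add(r)
                    sos.append(r)  # new clauses stay in the set of support
        usable.add(given)
    return False

# KB: P, and P -> Q written as the clause (~P v Q). Goal: Q, so we add ~Q.
kb = [frozenset({"P"}), frozenset({"~P", "Q"})]
print(refute(kb, [frozenset({"~Q"})]))  # True
```

Because every step involves a supported clause, the prover never spends time resolving background clauses against each other, which is exactly the focus the set-of-support heuristic aims for.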

Lecture No. 18-28

5 Expert Systems

Expert Systems (ES) are a popular and useful application area in AI. Having studied KRR, it is instructive to study ES to see a practical manifestation of the principles learnt there.

5.1 What is an Expert?

Before we attempt to define an expert system, we have to look at what we take the term 'expert' to mean when we refer to human experts. Some traits that characterize experts are:

- They possess specialized knowledge in a certain area.
- They possess experience in the given area.
- They can provide, upon elicitation, an explanation of their decisions.
- They have a skill set that enables them to translate the specialized knowledge gained through experience into solutions.

Try to think of the various traits you associate with experts you might know, e.g. a skin specialist, heart specialist, car mechanic, architect or software designer. You will see that the underlying common factors are similar to those outlined above.

5.2 What is an expert system?

According to Durkin, an expert system is "A computer program designed to model the problem solving ability of a human expert". With the above discussion of experts in mind, the aspects of human experts that expert systems model are the expert's:

- Knowledge
- Reasoning

5.3 History and Evolution

Before we begin to study the development of expert systems, let us get some historical perspective on the earliest practical AI systems. After the so-called dark ages in AI, expert systems were at the forefront of the rebirth of AI. There was a realization in the late 1960s that the general framework of problem solving was not enough to solve all kinds of problems. This was reinforced by the realization that specialized knowledge is a very important component of practical systems.

People observed that systems designed for well-focused problems and domains outperformed more 'general' systems. These observations provided the motivation for expert systems. Expert systems are historically important as some of the earliest practical AI systems, and they are among the most widely used AI systems in practice. To highlight the utility of expert systems, we will look at some famous expert systems, which served to define the paradigms for current expert systems.

5.3.1 Dendral (1960s)

Dendral was one of the pioneering expert systems. It was developed at Stanford for NASA to perform chemical analysis of Martian soil for space missions. Given mass spectral data, the problem was to determine the molecular structure. In the laboratory, the 'generate and test' method was used: possible hypotheses about molecular structures were generated and tested by matching them to actual data.

There was an early realization that experts use certain heuristics to rule out certain options when looking at possible structures. It seemed like a good idea to encode that knowledge in a software system. The result was the program Dendral, which gained a lot of acclaim and, most importantly, provided the important distinction that Durkin describes as: 'Intelligent behavior is dependent, not so much on the methods of reasoning, but on the knowledge one has to reason with'.

5.3.2 MYCIN (mid-1970s)

MYCIN was developed at Stanford to aid physicians in diagnosing and treating patients with bacterial blood infections. The motivation for building MYCIN was that there were few experts in that disease, and those experts also had limited availability. Immediate expertise was often needed because doctors were dealing with a life-threatening condition. MYCIN was tested in 1982. Its diagnoses on ten selected cases were obtained, along with the diagnoses of a panel of human experts. MYCIN's overall score was higher than that of the human experts!

MYCIN was an important system in the history of AI because it demonstrated that expert systems could be used for solving practical problems. It was also pioneering work on the structure of ES (separation of knowledge and control): unlike Dendral, MYCIN used the same structure that is now formalized for expert systems.

5.3.3 R1/XCON (late 1970s)

R1/XCON is also amongst the most cited expert systems. It was developed by DEC (Digital Equipment Corporation) as a computer configuration assistant. It was one of the most successful expert systems in routine use, bringing an estimated saving of $25 million per year to DEC. It is a classic example of how an ES can increase the productivity of an organization by assisting existing experts.

5.4 Comparison of a human expert and an expert system


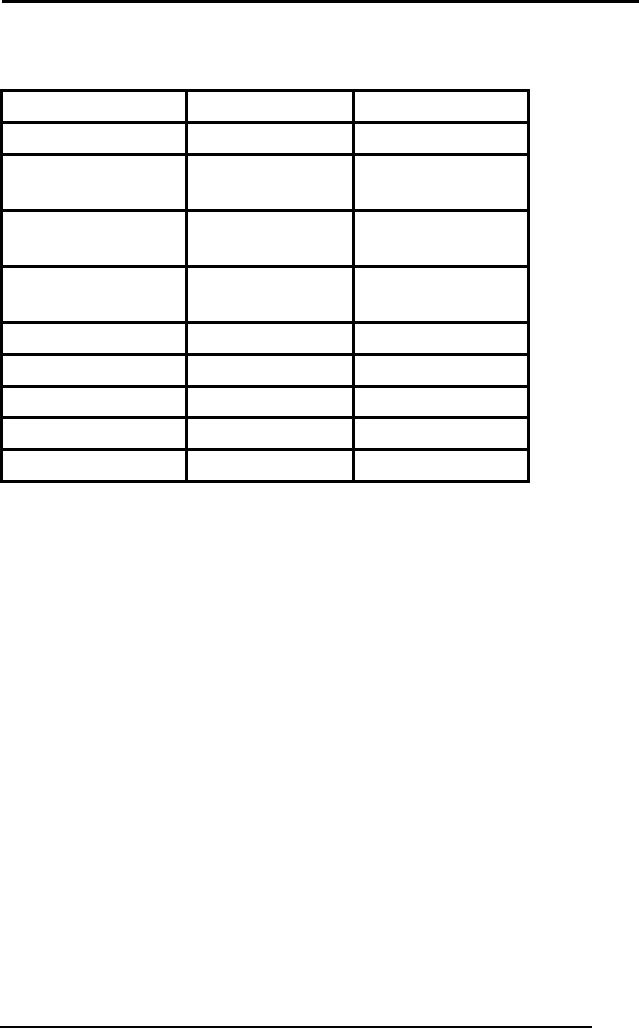

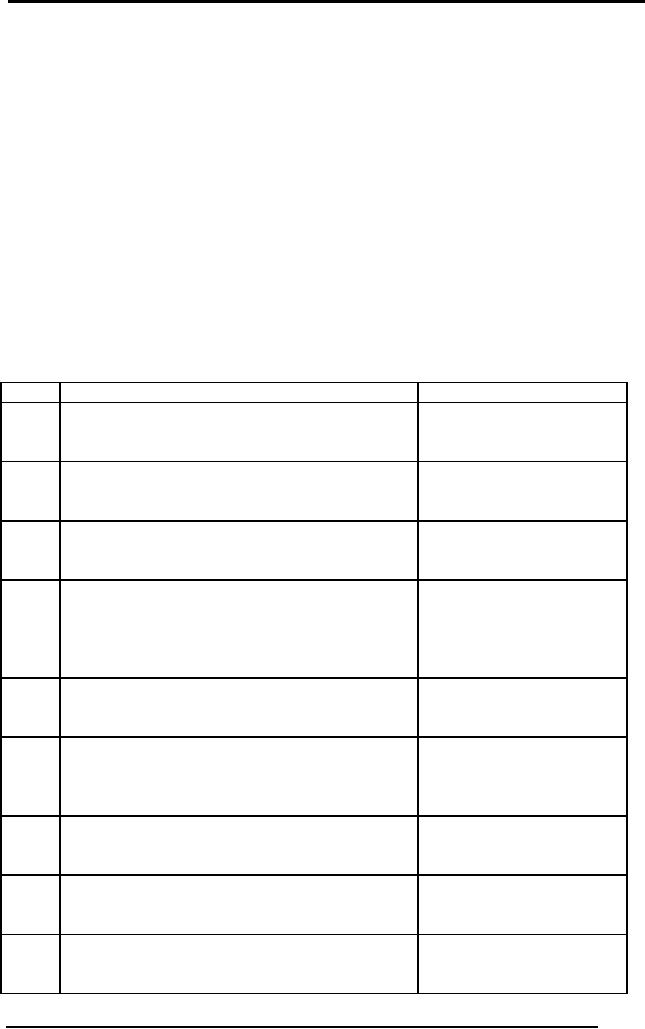

The following table compares human experts to expert systems. While looking at it, consider some examples, e.g. a doctor or a weather expert.

| Issues | Human Expert | Expert System |
|---|---|---|
| Availability | Limited | Always |
| Geographic location | Locally available | Anywhere |
| Safety considerations | Irreplaceable | Can be replaced |
| Durability | Depends on individual | Non-perishable |
| Performance | Variable | High |
| Speed | Variable | High |
| Cost | High | Low |
| Learning ability | Variable/High | Low |
| Explanation | Variable | Exact |

5.5 Roles of an expert system

An expert system may take two main roles relative to the human expert: it may replace the expert or assist the expert.

Replacement of expert

This proposition raises many eyebrows. It is not very practical in some situations, but feasible in others. Consider drastic situations where safety or location is an issue, e.g. a mission to Mars. In such cases replacement of an expert may be the only feasible option. Also, in cases where an expert cannot be available at a particular geographical location, e.g. volcanic areas, it is expedient to use an expert system as a substitute.

An example of this role is a France-based oil exploration company that maintains a number of oil wells. The company had a problem: the drills would occasionally become stuck. This typically occurs when the drill hits something that prevents it from turning. Delays due to this problem often caused huge losses until an expert could arrive at the scene to investigate. The company decided to deploy an expert system to solve the problem. A system called 'Drilling Advisor' (Elf-Aquitaine 1983) was developed, which saved the company from the huge losses that would otherwise have been incurred.

Assisting expert


Assisting an expert is the most commonly found role of an ES. The goal is to aid an expert in routine tasks to increase productivity, or to aid in managing a complex situation by using an expert system that may itself draw on the experience of other (possibly more than one) individuals. Such an expert system helps an expert overcome shortcomings such as failing to recall relevant information.

XCON is an example of how an ES can assist an expert.

5.6 How are expert systems used?

Expert systems may be used in a host of application areas including diagnosis, interpretation, prescription, design, planning, control, instruction, prediction and simulation.

Control applications

In control applications, ES are used to adaptively govern or regulate the behavior of a system, e.g. controlling a manufacturing process or a medical treatment. The ES obtains data about the current system state, reasons, predicts future system states and recommends (or executes) adjustments accordingly. An example of such a system is VM (Fagan 1978). This ES is used to monitor patient status in the intensive care unit. It analyzes heart rate, blood pressure and breathing measurements to adjust the ventilator being used by the patient.

Design

ES are used for design applications to configure objects under given design constraints, e.g. XCON. Such ES often use non-monotonic reasoning, because a later design step can invalidate conclusions drawn at earlier steps. Another example of a design ES is PEACE (Dincbas 1980), which is a CAD tool to assist in the design of electronic structures.

Diagnosis and Prescription

An ES can serve to identify system malfunction points. To do this it must have knowledge of possible faults as well as a diagnosis methodology extracted from technical experts, e.g. diagnosis based on a patient's symptoms, or diagnosing malfunctioning electronic structures. Most diagnosis ES have a prescription subsystem. Such systems are usually interactive, building on information from the user to narrow down the diagnosis.

Instruction and Simulation

ES may be used to guide the instruction of a student in some topic. Tutoring applications include GUIDON (Clancey 1979), which instructs students in the diagnosis of bacterial infections. Its strategy is to present the user with cases for which it already has the solution. It then analyzes the student's response, compares the student's approach to its own, and directs the student based on the differences.


Simulation

ES can be used to model processes or systems for operational study, or for use along with tutoring applications.

Interpretation

According to Durkin, interpretation is 'Producing an understanding of situation from given information'. An example of a system that provides interpretation is FXAA (1988). This ES provides financial assistance for a commercial bank. It looks at a large number of transactions and identifies irregularities in transaction trends. It also enables automated audit.

Planning and prediction

ES may be used for planning applications, e.g. recommending the steps a robot should carry out to complete a task, or cash management planning. SMARTPlan is such a system, a strategic market planning expert (Beeral, 1993). It suggests the appropriate marketing strategy required to achieve economic success. Similarly, prediction systems infer likely consequences from a given situation.

Appropriate domains for expert systems

When analyzing a particular domain to see if an expert system may be useful, the system analyst should ask the following questions:

- Can the problem be effectively solved by conventional programming? If not, an ES may be the choice, because ES are especially suited to ill-structured problems.
- Is the domain well-bounded? For example, a headache diagnosis system may eventually have to contain domain knowledge of many areas of medicine, because it is not easy to limit diagnosis to one area. In such cases, where the domain is too wide, building an ES may not be a feasible proposition.
- What are the practical issues involved? Is some human expert willing to cooperate? Is the expert's knowledge especially uncertain and heuristic? If so, an ES may be useful.

5.7 Expert system structure

Having discussed the scenarios and applications in which expert systems may be useful, let us delve into the structure of expert systems. To facilitate this, we use the analogy of an expert (say, a doctor) solving a problem. The expert has the following:

- Focused area of expertise
- Specialized knowledge (long-term memory, LTM)
- Case facts (short-term memory, STM)
- Reasons with these to form new knowledge


- Solves the given problem

Now, we are ready to define the corresponding concepts in an expert system.

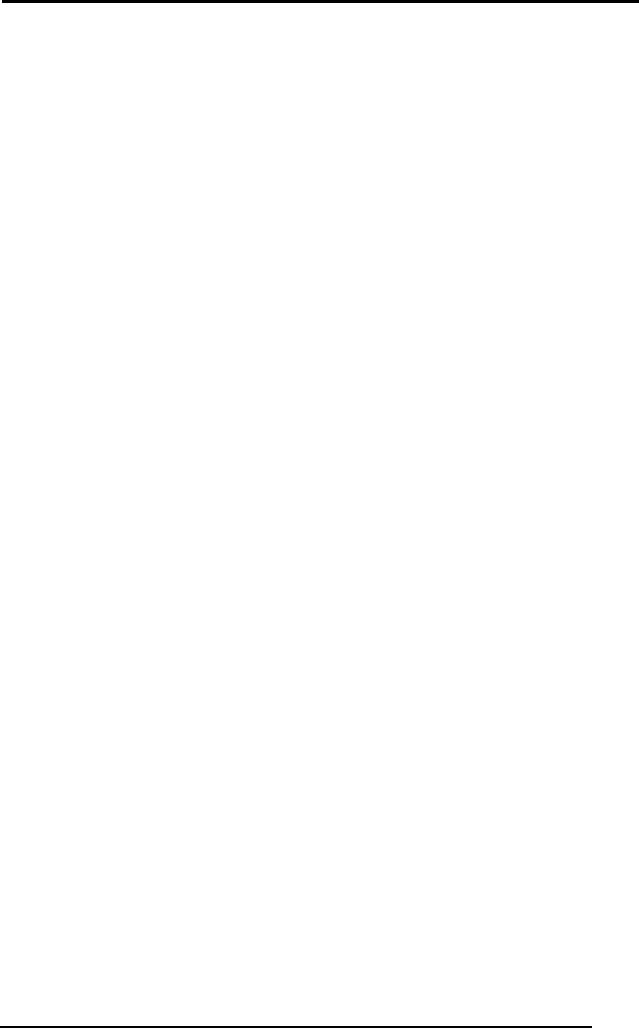

| Human Expert | Expert System |
|---|---|
| Focused area of expertise | Domain |
| Specialized knowledge (stored in LTM) | Domain knowledge (stored in knowledge base) |
| Case facts (stored in STM) | Case/inferred facts (stored in working memory) |
| Reasoning | Inference engine |
| Solution | Conclusions |

We can

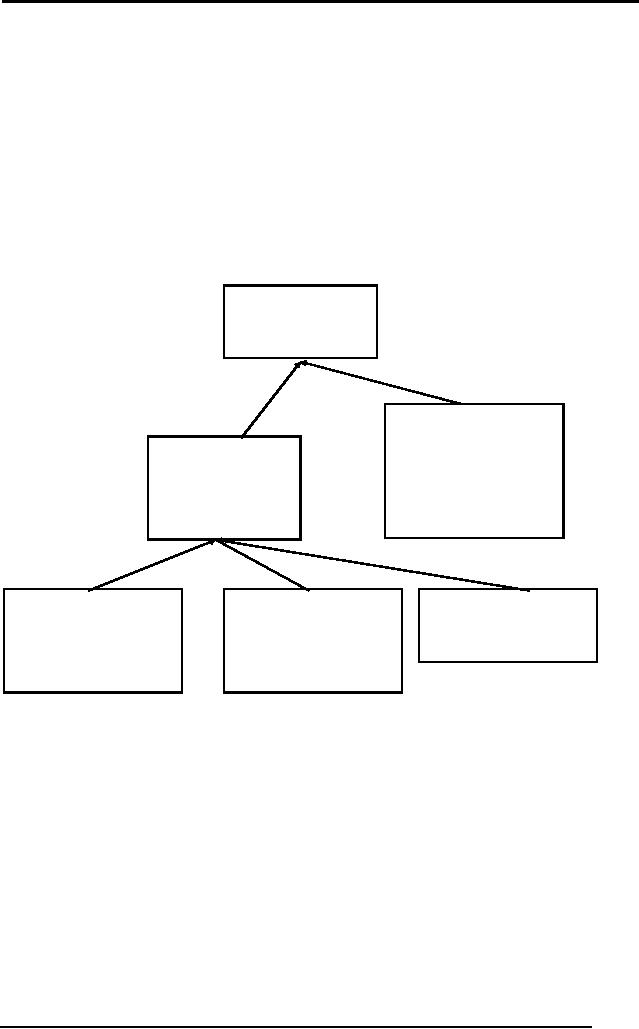

view the structure of the ES

and its components as shown in

the figure

below

Figure 13: Expert System Structure. Components: USER; Inference Engine; Working Memory (analogy: STM; initial case facts, inferred facts); Knowledge Base (analogy: LTM; domain knowledge).

5.7.1 Knowledge Base

The knowledge base is the part of an expert system that contains the domain knowledge, i.e.

- Problem facts, rules
- Concepts
- Relationships

As we have emphasised several times, the power of an ES lies to a large extent in the richness of its knowledge. Therefore, one of the prime roles of the expert system designer is to act as a knowledge engineer. As a knowledge engineer, the designer must overcome the knowledge acquisition bottleneck and find an effective way to get information from the expert and encode it in the knowledge base, using one of the knowledge representation techniques we discussed in KRR.

As discussed in the KRR section, one way of encoding that knowledge is in the form of IF-THEN rules. We saw that such a representation is especially conducive to reasoning.

5.7.2 Working memory

The working memory is the 'part of the expert system that contains the problem facts that are discovered during the session', according to Durkin. One session in the working memory corresponds to one consultation. During a consultation:

- The user presents some facts about the situation.
- These are stored in the working memory.
- Using these and the knowledge stored in the knowledge base, new information is inferred and also added to the working memory.

5.7.3 Inference Engine

The inference engine can be viewed as the processor in an expert system that matches the facts contained in the working memory with the domain knowledge contained in the knowledge base, to draw conclusions about the problem. It works with the knowledge base and the working memory, and draws on both to add new facts to the working memory.

If the knowledge of an ES is represented in the form of IF-THEN rules, the inference engine has the following strategy: match the given facts in working memory to the premises of the rules in the knowledge base; if a match is found, 'fire' the rule, i.e. add its conclusion to the working memory. Do this repeatedly, while new facts can be added, until you come up with the desired conclusion.
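The match-fire strategy described above can be sketched in a few lines. This is a minimal illustration, assuming purely propositional rules (premises and conclusions are plain strings with no variables); the `Rule` class, the `infer` function and the example rules are hypothetical, not part of any particular expert system shell.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    name: str
    premises: frozenset[str]   # IF part: every premise must be in WM (AND)
    conclusion: str            # THEN part

def infer(rules: list[Rule], facts: set[str], goal: str | None = None) -> set[str]:
    """Match rule premises against working memory and fire every matching
    rule, repeating while new facts can be added or until `goal` appears."""
    wm = set(facts)                                   # working memory
    while goal is None or goal not in wm:
        new = [r.conclusion for r in rules            # scan the knowledge base
               if r.premises <= wm and r.conclusion not in wm]
        if not new:
            break                                     # nothing left to fire
        wm.update(new)                                # 'fire': add conclusions to WM
    return wm

# Hypothetical rules, not taken from the text.
RULES = [
    Rule("R1", frozenset({"cloudy", "humid"}), "rain likely"),
    Rule("R2", frozenset({"rain likely"}), "carry umbrella"),
]
print(sorted(infer(RULES, {"cloudy", "humid"})))
```

This version fires every matching rule in a sweep; which rule should fire first when several match is a separate question (conflict resolution), which the sketch deliberately ignores.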


We will illustrate the above features using examples in the following sections.


5.7.4 Expert System Example: Family

| Knowledge Base | Working Memory |
|---|---|
| Rule 1: IF father(X, Y) AND father(X, Z) THEN brother(Y, Z) | father(M.Tariq, Ali) |
| Rule 2: IF father(X, Y) THEN payTuition(X, Y) | father(M.Tariq, Ahmed) |
| Rule 3: IF brother(X, Y) THEN like(X, Y) | brother(Ali, Ahmed) |
| | payTuition(M.Tariq, Ali) |
| | payTuition(M.Tariq, Ahmed) |
| | like(Ali, Ahmed) |

Let's look at the example above to see how the knowledge base and working memory are used by the inference engine to add new facts to the working memory. The knowledge base column on the left contains the three rules of the system. The working memory starts out with two initial case facts:

- father(M.Tariq, Ali)
- father(M.Tariq, Ahmed)

The inference engine matches each rule in turn with the facts in the working memory to see if the premises are all matched. Once all premises are matched, the rule is fired and the conclusion is added to the working memory, e.g. the premises of rule 1 match the initial facts, therefore it fires and the fact brother(Ali, Ahmed) is added. This matching of rule premises and facts continues until no new facts can be added to the system. The matching and firing is indicated by arrows in the above table.
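The rules in the family example contain variables, so matching premises against working memory means finding consistent variable bindings. The sketch below is one possible way to do this, under our own assumptions: facts are tuples, terms starting with `?` are variables (the text writes them as X, Y, Z), and each rule carries an extra guard function. The helper names `match`, `match_all` and `forward_chain` are hypothetical. The guard `?Y != ?Z` on Rule 1 is our addition; without it the rule as written would also derive brother(Ali, Ali). The engine also derives the symmetric facts brother(Ahmed, Ali) and like(Ahmed, Ali), which the table does not list.

```python
from __future__ import annotations
from collections.abc import Iterator

Fact = tuple[str, ...]
Bindings = dict[str, str]

def is_var(term: str) -> bool:
    return term.startswith("?")          # our convention: ?X, ?Y, ?Z are variables

def match(pattern: Fact, fact: Fact, b: Bindings) -> Bindings | None:
    """Extend bindings b so that pattern equals fact, or return None."""
    if len(pattern) != len(fact):
        return None
    b = dict(b)
    for p, f in zip(pattern, fact):
        if is_var(p):
            if b.setdefault(p, f) != f:  # already bound to a different value
                return None
        elif p != f:                     # constants (incl. predicate name) must agree
            return None
    return b

def match_all(premises: list[Fact], wm: set[Fact], b: Bindings) -> Iterator[Bindings]:
    """Yield every set of bindings that satisfies all premises (joined by AND)."""
    if not premises:
        yield b
        return
    for fact in wm:
        nb = match(premises[0], fact, b)
        if nb is not None:
            yield from match_all(premises[1:], wm, nb)

def forward_chain(rules, facts: set[Fact]) -> set[Fact]:
    wm = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion, guard in rules:
            for b in list(match_all(premises, wm, {})):       # snapshot before adding
                new = tuple(b.get(t, t) for t in conclusion)  # substitute variables
                if guard(b) and new not in wm:
                    wm.add(new)                               # fire the rule
                    changed = True
    return wm

RULES = [
    # Rule 1: IF father(X, Y) AND father(X, Z) THEN brother(Y, Z); guard is our addition
    ([("father", "?X", "?Y"), ("father", "?X", "?Z")], ("brother", "?Y", "?Z"),
     lambda b: b["?Y"] != b["?Z"]),
    # Rule 2: IF father(X, Y) THEN payTuition(X, Y)
    ([("father", "?X", "?Y")], ("payTuition", "?X", "?Y"), lambda b: True),
    # Rule 3: IF brother(X, Y) THEN like(X, Y)
    ([("brother", "?X", "?Y")], ("like", "?X", "?Y"), lambda b: True),
]

wm = forward_chain(RULES, {("father", "M.Tariq", "Ali"), ("father", "M.Tariq", "Ahmed")})
for fact in sorted(wm):
    print(f"{fact[0]}({', '.join(fact[1:])})")
```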

5.7.5 Expert system example: raining


| Working Memory | Knowledge Base |
|---|---|
| person(Ali) | Rule 1: IF person(X) AND person(Y) AND likes(X, Y) AND sameSchool(X, Y) THEN friends(X, Y) |
| person(Ahmed) | Rule 2: IF friends(X, Y) AND weekend() THEN goToMovies(X) AND goToMovies(Y) |
| cloudy() | Rule 3: IF goToMovies(X) AND cloudy() THEN carryUmbrella(X) |
| likes(Ali, Ahmed) | |
| sameSchool(Ali, Ahmed) | |
| weekend() | |
| friends(Ali, Ahmed) | |
| goToMovies(Ali) | |
| goToMovies(Ahmed) | |
| carryUmbrella(Ali) | |
| carryUmbrella(Ahmed) | |

5.7.6 Explanation facility

The explanation facility is a module of an expert system that allows transparency of operation by providing an explanation of how it reached the conclusion. In the family example above, how does the expert system draw the conclusion that Ali likes Ahmed? The answer to this is the sequence of reasoning steps, as shown with the arrows in the table below.


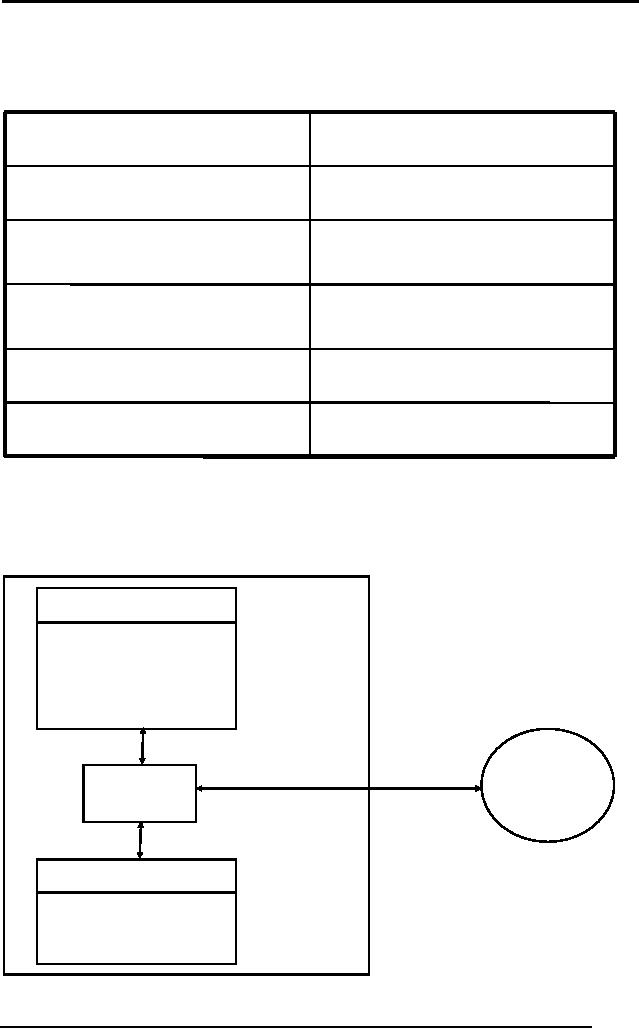

| Working Memory | Knowledge Base |
|---|---|
| father(M.Tariq, Ali) | Rule 1: IF father(X, Y) AND father(X, Z) THEN brother(Y, Z) |
| father(M.Tariq, Ahmed) | Rule 2: IF father(X, Y) THEN payTuition(X, Y) |
| brother(Ali, Ahmed) | Rule 3: IF brother(X, Y) THEN like(X, Y) |
| payTuition(M.Tariq, Ali) | |
| payTuition(M.Tariq, Ahmed) | |
| like(Ali, Ahmed) | |

The arrows above provide the explanation for how the fact like(Ali, Ahmed) was added to the working memory.
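A simple way to build an explanation facility is to record, whenever a rule fires, which rule produced the new fact and which facts matched its premises; a 'how' explanation is then a walk back through these records. The sketch below assumes, to keep it short, that the family rules have already been instantiated by hand with Ali, Ahmed and M.Tariq (ground rules with no variables); `forward_chain_with_trace` and `explain` are hypothetical names.

```python
from __future__ import annotations

Justification = tuple[str, tuple[str, ...]]   # (rule name, premises used)

def forward_chain_with_trace(rules, facts):
    """Forward chaining that remembers why each fact is in working memory.
    why[f] is None for case facts, else (rule name, premises used)."""
    why: dict[str, Justification | None] = {f: None for f in facts}
    changed = True
    while changed:
        changed = False
        for name, premises, conclusion in rules:
            if conclusion not in why and all(p in why for p in premises):
                why[conclusion] = (name, tuple(premises))  # record the justification
                changed = True
    return why

def explain(fact, why, depth=0):
    """'How' explanation: walk back through the recorded justifications."""
    pad = "  " * depth
    if why[fact] is None:
        return [f"{pad}{fact} was given as a case fact"]
    rule, premises = why[fact]
    lines = [f"{pad}{fact} was derived by {rule} from:"]
    for p in premises:
        lines.extend(explain(p, why, depth + 1))
    return lines

# Family rules instantiated by hand (our simplification).
GROUND_RULES = [
    ("Rule 1", ["father(M.Tariq, Ali)", "father(M.Tariq, Ahmed)"], "brother(Ali, Ahmed)"),
    ("Rule 2", ["father(M.Tariq, Ali)"], "payTuition(M.Tariq, Ali)"),
    ("Rule 2", ["father(M.Tariq, Ahmed)"], "payTuition(M.Tariq, Ahmed)"),
    ("Rule 3", ["brother(Ali, Ahmed)"], "like(Ali, Ahmed)"),
]
why = forward_chain_with_trace(GROUND_RULES, ["father(M.Tariq, Ali)", "father(M.Tariq, Ahmed)"])
print("\n".join(explain("like(Ali, Ahmed)", why)))
```

Running it prints the same chain the arrows describe:

```
like(Ali, Ahmed) was derived by Rule 3 from:
  brother(Ali, Ahmed) was derived by Rule 1 from:
    father(M.Tariq, Ali) was given as a case fact
    father(M.Tariq, Ahmed) was given as a case fact
```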

5.8 Characteristics of expert systems

Having looked at the basic operation of expert systems, we can begin to outline desirable properties or characteristics we would like our expert systems to possess.

ES have an explanation facility. This is the module of an expert system that allows transparency of operation by providing an explanation of how the inference engine reached the conclusion. We want ES to have this facility so that users can know how the system reaches its conclusions.

An expert system is different from conventional programs in the sense that program control and knowledge are separate. We can change one while affecting the other minimally. This separation is manifest in the ES structure: knowledge base, working memory and inference engine. Separation of these components allows changes to the knowledge to be independent of changes in control, and vice versa.

"There is a clear separation of general knowledge about the problem (the rules forming the knowledge base) from information about the current problem (the input data) and methods for applying the general knowledge to a problem (the rule interpreter). The program itself is only an interpreter (or general reasoning mechanism) and ideally the system can be changed simply by adding or subtracting rules in the knowledge base" (Duda)

Besides these properties, an expert system also possesses expert knowledge, in that it embodies the expertise of a human expert. It focuses expertise, because the larger the domain, the more complex the expert system becomes; e.g. a car diagnosis expert is more easily handled if we make separate ES components for engine problems, electrical problems, etc., instead of designing just one component for all problems.

We have also seen that an ES reasons heuristically, by encoding an expert's rules-of-thumb.

Lastly, an expert system, like a human expert, makes mistakes, but that is tolerable if we can get the expert system to perform at least as well as the human expert it is trying to emulate.

5.9 Programming vs. knowledge engineering

Conventional programming is a sequential, three-step process: Design, Code, Debug. Knowledge engineering, which is the process of building an expert system, also involves assessment, knowledge acquisition, design, testing, documentation and maintenance. However, there are some key differences between the two programming paradigms.

Conventional programming focuses on the solution, while ES programming focuses on the problem. An ES is designed on the philosophy that if we have the right knowledge base, the solution will be derived from that data using a generic reasoning mechanism.

Unlike traditional programs, you don't just program an ES and consider it 'built'. It grows as you add new knowledge. Once the framework is made, the addition of knowledge dictates the growth of the ES.

5.10 People involved in an expert system project

The main people involved in an ES development project are the domain expert, the knowledge engineer and the end user.

Domain Expert

A domain expert is 'A person who possesses the skill and knowledge to solve a specific problem in a manner superior to others' (Durkin). For our purposes, an expert should have expert knowledge in the given domain, good communication skills, availability and readiness to co-operate.

Knowledge Engineer

A knowledge engineer is 'a person who designs, builds and tests an Expert System' (Durkin). A knowledge engineer plays a key role in identifying, acquiring and encoding knowledge.


End-user

The end users are the people who will use the expert system. Correctness, usability and clarity are important ES features for an end user.

5.11 Inference mechanisms

In the examples we have looked at so far, we have seen informally how the inference engine adds new facts to the working memory. We can see that many different sequences for matching are possible and that we can have multiple strategies for inferring new information, depending upon our goal. If we want to look for a specific fact, it makes no sense to add all possible facts to the working memory. In other cases, we might actually need to know all possible facts about the situation. Guided by this intuition, we have two formal inference mechanisms: forward and backward chaining.

5.11.1 Forward Chaining

Let's look at how a doctor goes about diagnosing a patient. He asks the patient for symptoms and then infers a diagnosis from the symptoms. Forward chaining is based on the same idea. It is an "inference strategy that begins with a set of known facts, derives new facts using rules whose premises match the known facts, and continues this process until a goal state is reached or until no further rules have premises that match the known or derived facts" (Durkin). As you will come to appreciate shortly, it is a data-driven approach.

Approach

1. Add facts to working memory (WM).
2. Take each rule in turn and check to see if any of its premises match the facts in the WM.
3. When matches are found for all premises of a rule, place the conclusion of the rule in the WM.
4. Repeat this process until no more facts can be added. Each repetition of the process is called a pass.

We will demonstrate forward chaining using an example.

Doctor example (forward chaining)

Rules

Rule 1: IF the patient has a deep cough AND we suspect an infection THEN the patient has pneumonia.

Rule 2: IF the patient's temperature is above 100 THEN the patient has a fever.


Rule 3: IF the patient has been sick for over a fortnight AND the patient has a fever THEN we suspect an infection.

Case facts

- Patient's temperature = 103
- Patient has been sick for over a month
- Patient has violent coughing fits

First pass

| Rule, premise | Status | Working memory |
|---|---|---|
| 1, 1 | True | Temp = 103; Deep cough; Sick for a month; Coughing fits |
| 1, 2 | Unknown | Temp = 103; Suspect infection; Sick for a month; Coughing fits |
| 2, 1 | True, fire rule | Temp = 103; Temperature > 100; Sick for a month; Coughing fits; Patient has fever |

Second pass

| Rule, premise | Status | Working memory |
|---|---|---|
| 1, 1 | True | Temp = 103; Sick for a month; Deep cough; Coughing fits; Patient has fever |
| 1, 2 | Unknown | Temp = 103; Suspect infection; Sick for a month; Coughing fits; Patient has fever |
| 3, 1 | True | Temp = 103; Sick for over fortnight; Sick for a month; Coughing fits; Patient has fever |
| 3, 2 | True, fire | Temp = 103; Patient has fever; Sick for a month; Coughing fits; Patient has fever; Infection |

Third pass


| Rule, premise | Status | Working memory |
|---|---|---|
| 1, 1 | True | Temp = 103; Sick for a month; Deep cough; Coughing fits; Patient has fever; Infection |
| 1, 2 | True, fire | Temp = 103; Suspect infection; Sick for a month; Coughing fits; Patient has fever; Infection; Pneumonia |

Now, no more facts can be added to the WM. Diagnosis: Patient has pneumonia.
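The pass tables above can be reproduced with a small loop. Reading the tables, a pass scans the rules in order, skips a rule whose conclusion is already known, fires the first rule whose premises are all in working memory, and then starts a new pass; chaining stops after a pass in which nothing fires. The sketch below follows that reading, which is our interpretation of the tables rather than an algorithm stated in the text, and `forward_chain_passes` is a hypothetical name. As a further assumption, the case facts are entered already phrased in the rules' vocabulary (violent coughing fits as 'deep cough', sick for a month as 'sick over a fortnight', temperature 103 as 'temperature above 100').

```python
from __future__ import annotations

# Knowledge base, in the order given in the text: (rule number, premises, conclusion)
RULES = [
    (1, ["deep cough", "suspect infection"], "pneumonia"),
    (2, ["temperature above 100"], "fever"),
    (3, ["sick over a fortnight", "fever"], "suspect infection"),
]

def forward_chain_passes(rules, facts):
    """One pass = scan the rules in order and fire the first applicable one.
    Returns the final working memory and a log of (pass, rule, new fact)."""
    wm = set(facts)
    log = []
    pass_no = 0
    while True:
        pass_no += 1
        for number, premises, conclusion in rules:
            if conclusion in wm:               # already known: do not fire again
                continue
            if all(p in wm for p in premises):
                wm.add(conclusion)             # fire the rule
                log.append((pass_no, number, conclusion))
                break                          # start the next pass
        else:
            return wm, log                     # no rule fired in this pass: stop

# Case facts, already phrased in the rules' vocabulary (our assumption).
case_facts = {"temperature above 100", "sick over a fortnight", "deep cough"}
wm, log = forward_chain_passes(RULES, case_facts)
for pass_no, rule, fact in log:
    print(f"pass {pass_no}: rule {rule} fires -> {fact}")
```

This prints `pass 1: rule 2 fires -> fever`, `pass 2: rule 3 fires -> suspect infection` and `pass 3: rule 1 fires -> pneumonia`, matching the three passes in the tables; a fourth pass fires nothing, so chaining stops.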

Issues in forward chaining

Undirected search

There is an important observation to be made about forward chaining. The forward chaining inference engine infers all possible facts from the given facts. It has no way of distinguishing between important and unimportant facts. Therefore, equal time is spent on trivial evidence as on crucial facts. This is a drawback of this approach, and we will see in the coming section how to overcome it.

Conflict resolution

Another important issue is conflict resolution. This is the question of what to do when the premises of two rules match the given facts. Which should be fired first? If we fire both, they may add conflicting facts, e.g.

IF you are bored AND you have no cash THEN go to a friend's place

IF you are bored AND you have a credit card THEN go watch a movie

If both rules are fired, you will add conflicting recommendations to the working memory.

Conflict resolution strategies

To overcome the conflict problem stated above, we may choose to use one of the following conflict resolution strategies:

- Fire the first rule in sequence (rule ordering in list). Using this strategy, all the rules in the list are ordered (the ordering imposes prioritization). When more than one rule matches, we simply fire the first in the sequence.


- Assign rule priorities (rule ordering by importance). Using this approach, we assign explicit priorities to rules to allow conflict resolution.
- Prefer more specific rules (more premises) over general rules. This strategy is based on the observation that a rule with more premises has, in a sense, more evidence or votes from its premises; therefore it should be fired in preference to a rule that has fewer premises.
- Prefer rules whose premises were added more recently to WM (time-stamping). This allows prioritizing recently added facts over older facts.
- Parallel strategy (view-points). Using this strategy, we do not actually resolve the conflict by selecting one rule to fire. Instead, we branch out our execution into a tree, with each branch operating in parallel on multiple threads of reasoning. This allows us to maintain multiple view-points on the argument concurrently.
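Each strategy except the parallel one is a different way of choosing one rule from the conflict set (the rules whose premises all match). The sketch below expresses four of them as selector functions; the parallel strategy is not shown, since it keeps every matching rule and branches rather than choosing. The third rule ('take a nap'), the `priority` field and the timestamps are hypothetical values added only to make the strategies disagree; they are not from the text.

```python
from __future__ import annotations
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    name: str
    premises: tuple[str, ...]
    conclusion: str
    priority: int = 0            # hypothetical field, used only by by_priority

def conflict_set(rules, wm):
    """Rules whose premises are all present in working memory."""
    return [r for r in rules if all(p in wm for p in r.premises)]

# Each strategy picks ONE rule from the conflict set cs.
# wm maps each fact to the time it was added (used for time-stamping).
def by_order(cs, rules, wm):
    return min(cs, key=rules.index)                                 # first in the list

def by_priority(cs, rules, wm):
    return max(cs, key=lambda r: r.priority)                        # highest priority

def by_specificity(cs, rules, wm):
    return max(cs, key=lambda r: len(r.premises))                   # most premises

def by_recency(cs, rules, wm):
    return max(cs, key=lambda r: max(wm[p] for p in r.premises))    # newest premise

RULES = [
    Rule("R0", ("bored",), "take a nap"),                           # hypothetical general rule
    Rule("R1", ("bored", "no cash"), "go to a friend's place"),
    Rule("R2", ("bored", "credit card"), "go watch a movie", priority=5),
]
WM = {"bored": 1, "no cash": 2, "credit card": 3}                   # fact -> timestamp

cs = conflict_set(RULES, WM)
for strategy in (by_order, by_priority, by_specificity, by_recency):
    print(strategy.__name__, "->", strategy(cs, RULES, WM).conclusion)
```

Under these assumptions, rule ordering picks R0, explicit priority and recency both pick R2, and specificity picks R1: R1 and R2 tie on premise count, and Python's `max` returns the first of several maximal items, so list order acts as a tie-breaker.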

5.11.2 Backward chaining

Backward chaining is an inference strategy that works backward from a hypothesis to a proof. You begin with a hypothesis about what the situation might be. Then you prove it using the given facts, e.g. a doctor may suspect some disease and proceed by inspecting the symptoms. In backward chaining terminology, the hypothesis to prove is called the goal.

Approach

1. Start with the goal.
2. The goal may be in WM initially, so check; if it is found, you are done!
3. If not, search for the goal in the THEN part of the rules (match conclusions rather than premises). This type of rule is called a goal rule.
4. Check to see if the goal rule's premises are listed in the working memory.
5. Premises not listed become sub-goals to prove.
6. The process continues in a recursive fashion until a premise is found that is not supported by a rule. A premise that cannot be concluded by any rule is called a primitive.
7. When a primitive is found, ask the user for information about it. Backtrack and use this information to prove the sub-goals and subsequently the goal.

As you look at the example for backward chaining below, notice how the approach of backward chaining is like depth-first search.

Backward chaining example

Consider the same example of the doctor and patient that we looked at previously.

Rules


Rule 1: IF the patient has a deep cough AND we suspect an infection THEN the patient has pneumonia.

Rule 2: IF the patient's temperature is above 100 THEN the patient has a fever.

Rule 3: IF the patient has been sick for over a fortnight AND the patient has a fever THEN we suspect an infection.

Goal: Patient has pneumonia

| Step | Description | Working memory |
|---|---|---|
| 1 | Goal: Patient has pneumonia. Not in working memory. | |
| 2 | Find rules with the goal in their conclusion: Rule 1. | |
| 3 | See if rule 1, premise 1 is known: "The patient has a deep cough". | |
| 4 | Find rules with this statement in their conclusion. No rule found, so "The patient has a deep cough" is a primitive. Prompt patient. Response: Yes. | Deep cough |
| 5 | See if rule 1, premise 2 is known: "We suspect an infection". | Deep cough |
| 6 | This is in the conclusion of rule 3. See if rule 3, premise 1 is known: "The patient has been sick for over a fortnight". | Deep cough |
| 7 | This is a primitive. Prompt patient. Response: Yes. | Deep cough; Sick over a month |
| 8 | See if rule 3, premise 2 is known: "The patient has a fever". | Deep cough; Sick over a month |
| 9 | This is the conclusion of rule 2. See if rule 2, premise 1 is known: "The patient's temperature is above 100". | Deep cough; Sick over a month |
| 10 | This is a primitive. Prompt patient. Response: Yes. Fire rule. | Deep cough; Sick over a month; Fever |
| 11 | Rule 3 fires. | Deep cough; Sick over a month; Fever; Infection |
| 12 | Rule 1 fires. | Deep cough; Sick over a month; Fever; Infection; Pneumonia |
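The backward chaining procedure is naturally recursive, which is why it behaves like depth-first search. The sketch below reproduces the doctor example under our own assumptions: statements are plain strings, the patient is simulated by a hypothetical `ask` callback with scripted answers, a 'no' answer is not remembered, and there is no check for cyclic rules. `backward_chain` is a hypothetical name.

```python
from __future__ import annotations
from collections.abc import Callable

RULES = [
    (1, ["deep cough", "suspect infection"], "pneumonia"),
    (2, ["temperature above 100"], "fever"),
    (3, ["sick over a fortnight", "fever"], "suspect infection"),
]

def backward_chain(goal: str, rules, wm: set[str], ask: Callable[[str], bool]) -> bool:
    """Try to prove goal depth first; primitives are asked of the user."""
    if goal in wm:                                   # step 2: already known
        return True
    goal_rules = [r for r in rules if r[2] == goal]  # step 3: match conclusions
    if not goal_rules:                               # primitive: no rule concludes it
        if ask(goal):                                # step 7: ask the user
            wm.add(goal)
            return True
        return False
    for _number, premises, conclusion in goal_rules:
        # steps 4-6: each premise is a sub-goal; all() stops at the first failure
        if all(backward_chain(p, rules, wm, ask) for p in premises):
            wm.add(conclusion)                       # the goal rule fires
            return True
    return False

# Scripted answers stand in for prompting the patient (hypothetical).
answers = {"deep cough": True, "sick over a fortnight": True, "temperature above 100": True}
asked: list[str] = []

def ask(question: str) -> bool:
    asked.append(question)
    return answers.get(question, False)

wm: set[str] = set()
print(backward_chain("pneumonia", RULES, wm, ask))   # True
print(asked)
```

The questions are asked in the same order as steps 4, 7 and 10 of the table: deep cough, then sick over a fortnight, then temperature above 100. Facts irrelevant to the goal are never examined, which is the focus that forward chaining lacks.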

5.11.3 Forward vs. backward chaining

Knowledge is explored differently in forward and backward chaining. Backward chaining is more focused and tries to avoid exploring unnecessary paths of reasoning. Forward chaining, on the other hand, is like an exhaustive search.

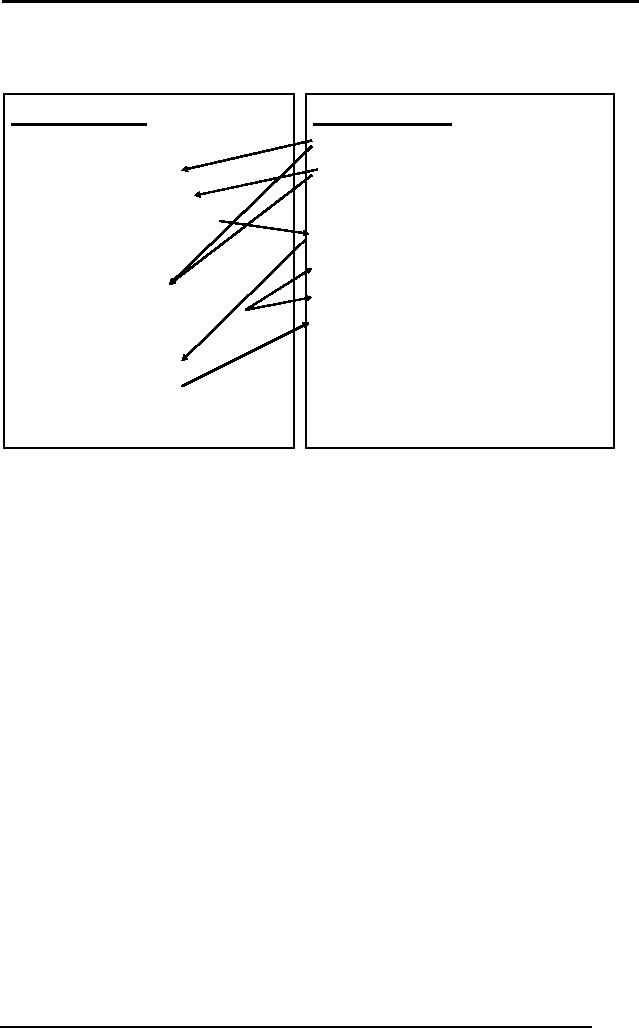

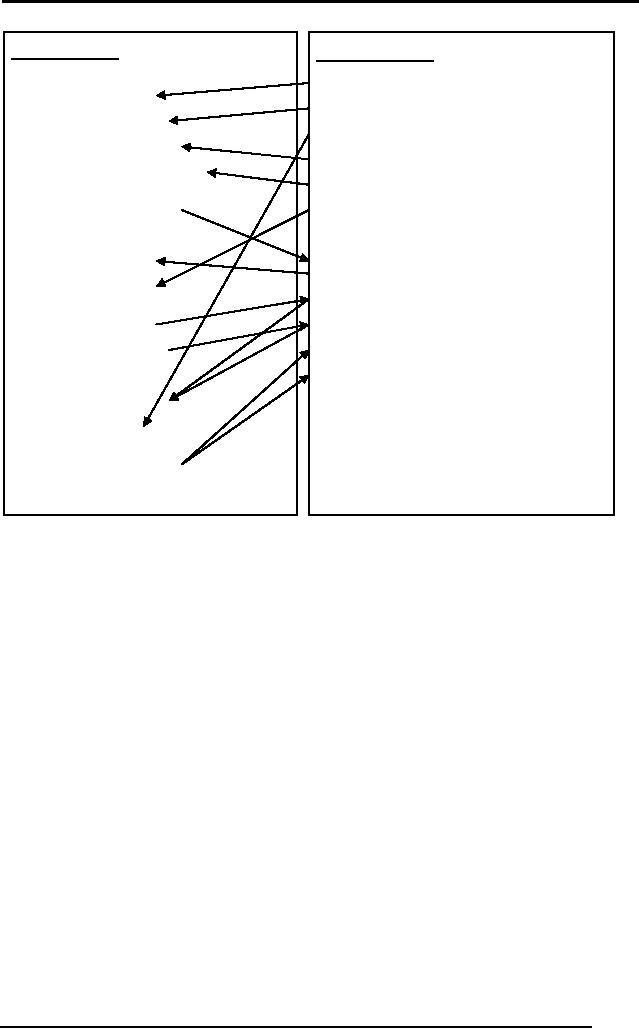

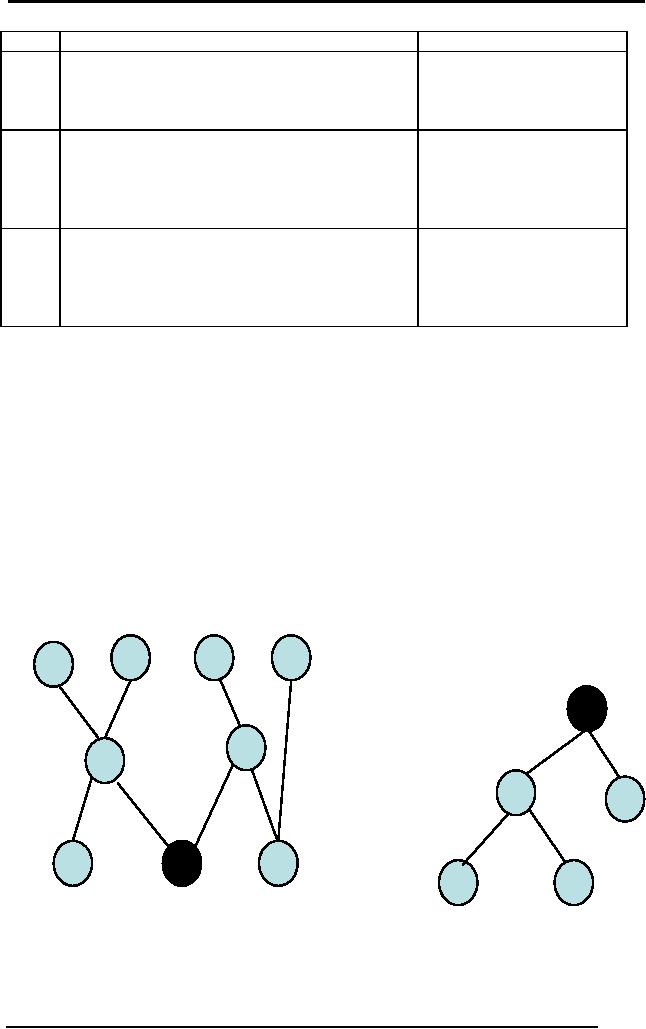

In the figures below, each node represents a statement. Forward chaining starts with several facts in the working memory. It uses rules to generate more facts. In the end, several facts have been added, amongst which one or more may be relevant. Backward chaining, however, starts with the goal state and tries to reach down to all primitive nodes (marked by '?'), where information is sought from the user.

Figures: Forward chaining; Backward chaining (primitive nodes marked '?')


5.12 Design of expert systems

We will now look at a software engineering methodology for developing practical ES. The general stages of the expert system development lifecycle, or ESDLC, are:

- Feasibility study
- Rapid prototyping
- Alpha system (in-house verification)
- Beta system (tested by users)
- Maintenance and evolution

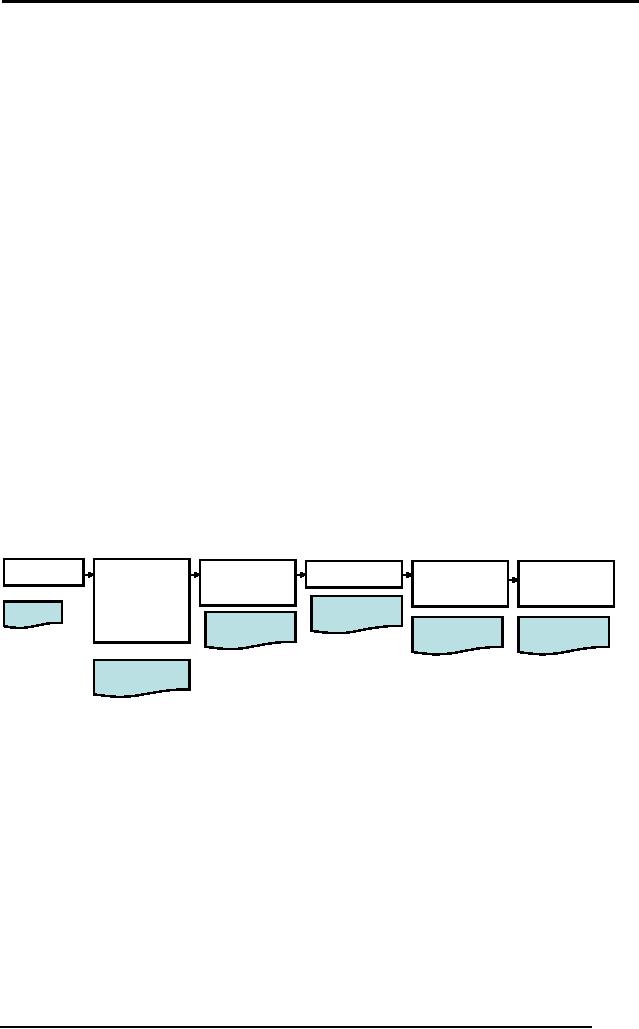

Linear model

The Linear

model (Bochsler 88) of

software development has

been successfully

used in

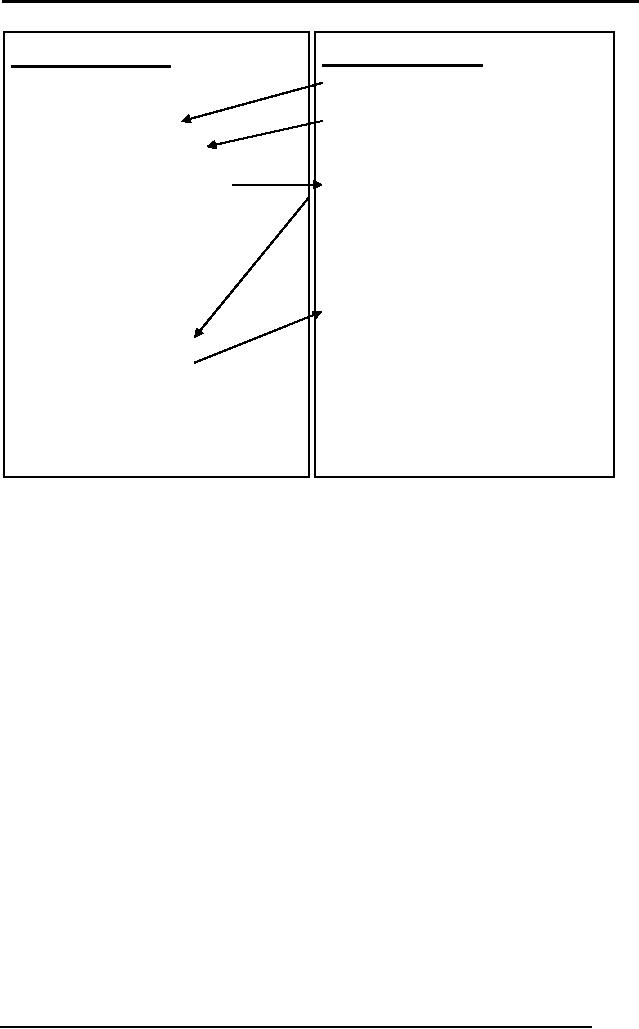

developing expert systems. A

linear sequence of steps is

applied

repeatedly in an

iterative fashion to develop

the ES. The main phases of

the

linear

sequence are

Planning

�

Knowledge

acquisition and analysis

�

Knowledge

design

�

Code

�

Knowledge

verification

�

System

evaluation

�

Figure: Linear Model for ES development

5.12.1 Planning phase

This phase involves the following steps:

• Feasibility assessment
• Resource allocation
• Task phasing and scheduling
• Requirements analysis

5.12.2 Knowledge acquisition

This is the most important stage in the development of an ES. During this stage the knowledge engineer works with the domain expert to acquire, organize and analyze the domain knowledge for the ES. "Knowledge acquisition is the bottleneck in the construction of expert systems" (Hayes-Roth et al.). The main steps in this phase are:

• Knowledge acquisition from the expert
• Define a knowledge acquisition strategy (consider various options)
• Identify concrete knowledge elements
• Group and classify knowledge. Develop a hierarchical representation where possible.
• Identify knowledge sources, i.e. experts in the domain
  o Identify potential sources (human experts, expert handbooks/manuals). For example, the knowledge engineer of a car-mechanic expert system may choose a mix of interviewing an expert mechanic and using a mechanic's troubleshooting manual.
    Tip: Limit the number of knowledge sources (experts) for simple domains to avoid scheduling and view conflicts. However, a single-expert approach may only be applicable to small, restricted domains.
  o Rank by importance
  o Rank by availability
  o Select an expert or a panel of experts
  o If more than one expert has to be consulted, consider a blackboard system, where several knowledge sources (kept partitioned) interact through an interface called a blackboard.
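The blackboard idea can be illustrated with a toy Python sketch; it is not a full blackboard architecture. Each knowledge source keeps its own partitioned rules and communicates only by reading facts from, and posting facts to, a shared blackboard, while a simple control loop offers the blackboard to each source in turn. The class names, the "mechanic" and "manual" sources and their rules are all hypothetical, loosely based on the car-mechanic example above.

```python
class Blackboard:
    """Shared workspace that all knowledge sources read from and post to."""

    def __init__(self, facts):
        self.facts = set(facts)


class KnowledgeSource:
    """One partitioned source of expertise, e.g. one expert or one manual."""

    def __init__(self, name, rules):
        self.name = name
        self.rules = rules  # list of (set of premises, conclusion)

    def contribute(self, board):
        """Post every conclusion whose premises are all on the blackboard."""
        added = []
        for premises, conclusion in self.rules:
            if conclusion not in board.facts and premises <= board.facts:
                board.facts.add(conclusion)
                added.append(conclusion)
        return added


def run_blackboard(board, sources):
    """Control loop: offer the board to each source until no source adds anything."""
    log = []
    progress = True
    while progress:
        progress = False
        for source in sources:
            for fact in source.contribute(board):
                log.append((source.name, fact))
                progress = True
    return log


mechanic = KnowledgeSource("mechanic", [({"engine turns slowly"}, "suspect battery")])
manual = KnowledgeSource(
    "manual", [({"suspect battery", "hydrometer reading low"}, "recharge battery")]
)
board = Blackboard({"engine turns slowly", "hydrometer reading low"})
print(run_blackboard(board, [manual, mechanic]))
# [('mechanic', 'suspect battery'), ('manual', 'recharge battery')]
```

Neither source reaches "recharge battery" on its own: the manual's rule only becomes applicable after the mechanic posts "suspect battery", which is the kind of cooperation between partitioned sources that a blackboard is meant to support.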

5.12.3 Knowledge acquisition techniques

• Knowledge elicitation by interview
• Brainstorming session with one or more experts. Try to introduce some structure to this session by defining the problem at hand, prompting for ideas and looking for converging lines of thought.
• Electronic brainstorming
• On-site observation
• Documented organizational expertise, e.g. troubleshooting manuals

5.12.4 Knowledge elicitation

Getting knowledge from the expert is called knowledge elicitation, as opposed to the broader term knowledge acquisition. Elicitation methods may be broadly divided into:

• Direct methods
  o Interviews
    - Very good at the initial stages
    - Reach a balance between structured (multiple choice, rating scale) and unstructured interviewing
    - Record interviews (transcribe or tape)
    - Use a mix of open-ended and closed-ended questions
  o Informal discussions (gently control digression, but do not offend the expert by frequent interruption)
• Indirect methods
  o Questionnaire

Problems that may be faced, and have to be overcome, during elicitation include:

• The expert may not be able to articulate his/her knowledge effectively.
• The expert may not provide relevant information.
• The expert may provide incomplete knowledge.
• The expert may provide inconsistent or incorrect knowledge.

5.12.5 Knowledge analysis

The goal of knowledge analysis is to analyze and structure the knowledge gained during the knowledge acquisition phase. The key steps to be followed during this stage are:

Identify

specific knowledge elements, at

the level of concepts,

entities, etc.

�

From

the notes taken during

the interview sessions,

extract specific

�

o Identify

strategies (as a list of

points)

o Translate

strategies to rules

o Identify

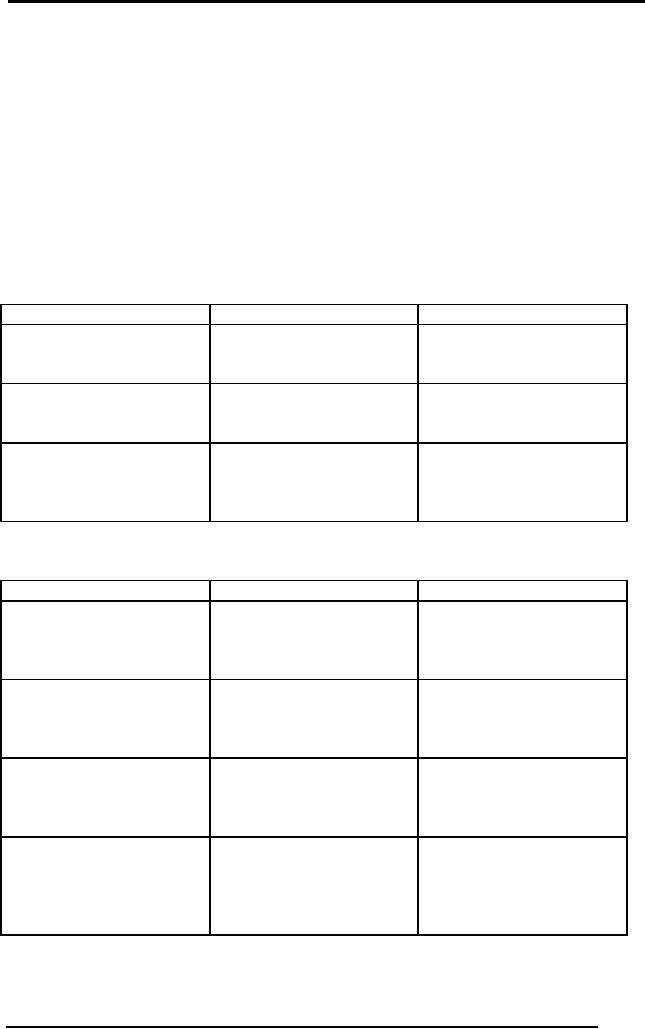

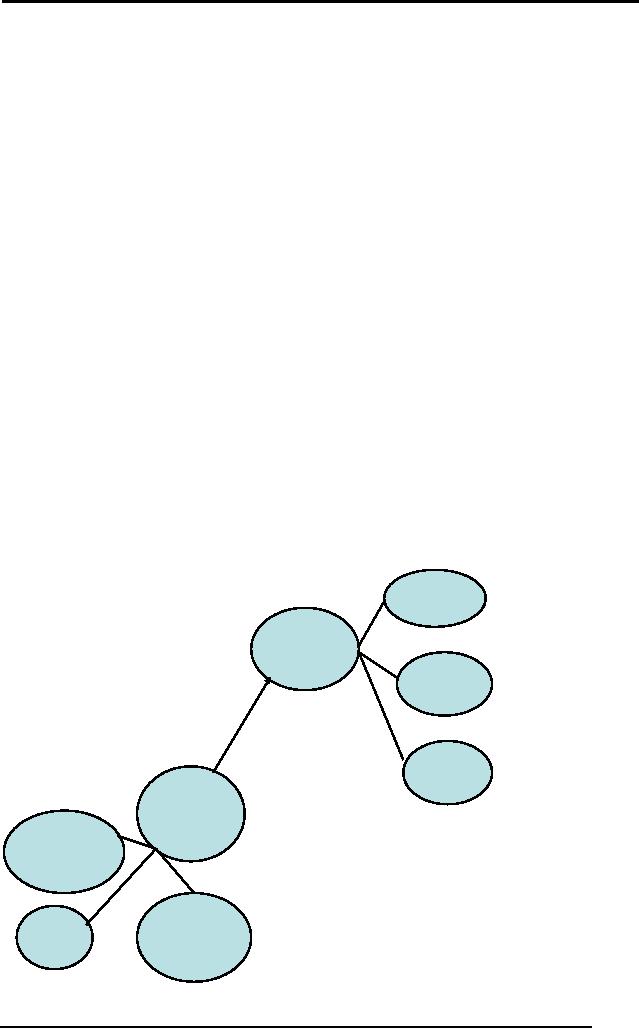

heuristics

o Identify

concepts

o Represent

concepts and their

relationships using some

visual

mechanism

like cognitive maps

Figure: Cognitive Map example (entities shown include Patient, Age, Medical History, Personal History, Tests, Echo Cardiogram, Blood Sugar and Blood Hematology)

The example cognitive map for the domain of medicine shows entities and their relationships. Concepts and sub-concepts are identified and grouped together to better understand the structure of the knowledge. Cognitive maps are usually used to represent static entities.

Inference networks

Inference networks encode the knowledge of rules and strategies.

Figure: Inference Network Example (Diagnosis is anemia: blood test shows low hemoglobin level AND symptoms indicate anemia; symptoms indicate anemia: feeling listless OR inner surface of eyelids pale OR consistent low blood pressure)
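An inference network can be stored directly as a tree of AND/OR nodes. The Python sketch below encodes the anemia network, reading the figure as one AND node and one three-way OR node (this reading of the extracted figure is an interpretation), and evaluates it against a set of observed findings. The names DIAGNOSIS_IS_ANEMIA and supported are illustrative only.

```python
# Internal nodes are ("AND" | "OR", [children]); leaves are observable findings.
DIAGNOSIS_IS_ANEMIA = ("AND", [
    "blood test shows low hemoglobin level",
    ("OR", [  # "symptoms indicate anemia"
        "feeling listless",
        "inner surface of eyelids pale",
        "consistent low blood pressure",
    ]),
])


def supported(node, findings):
    """Return True if the observed findings establish this node of the network."""
    if isinstance(node, str):
        return node in findings
    operator, children = node
    results = [supported(child, findings) for child in children]
    if operator == "AND":
        return all(results)
    if operator == "OR":
        return any(results)
    raise ValueError(f"unknown operator: {operator}")


print(supported(DIAGNOSIS_IS_ANEMIA,
                {"blood test shows low hemoglobin level", "feeling listless"}))   # True
print(supported(DIAGNOSIS_IS_ANEMIA,
                {"feeling listless", "inner surface of eyelids pale"}))           # False
```

The second call fails because, however many symptoms are present, the AND node still needs the blood test finding.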

Flowcharts

Flowcharts also capture knowledge of strategies. They may be used to represent a sequence of steps that depicts the order in which rule sets are applied. Try making a flowchart that depicts the following strategy. The doctor begins by asking about symptoms. If they are not indicative of some disease, the doctor will not ask for specific tests. If they are symptomatic of two or three potential diseases, the doctor decides which disease to check for first and rules out potential diagnoses in some heuristic sequence.
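One possible answer to the exercise, written as Python control flow instead of a drawn flowchart: each if statement and loop corresponds to a decision box. This is only a sketch; the disease-to-symptom table, the priority list standing in for the doctor's heuristic ordering, and the run_test callback are all hypothetical inputs.

```python
def diagnose(symptoms, disease_signs, priority, run_test):
    """Follow the doctor's strategy described in the text.

    symptoms: set of symptoms reported by the patient
    disease_signs: dict mapping each disease to the symptoms that suggest it
    priority: list of diseases in the (heuristic) order they should be checked
    run_test: callable(disease) -> True if the confirming test is positive
    """
    # Decision 1: are the symptoms indicative of any disease at all?
    candidates = [d for d, signs in disease_signs.items() if signs & symptoms]
    if not candidates:
        return None  # no specific tests are ordered
    # Decision 2: check candidates one by one in heuristic order, ruling each out.
    for disease in sorted(candidates, key=priority.index):
        if run_test(disease):
            return disease
    return None  # every candidate was ruled out


signs = {"flu": {"fever", "cough"}, "anemia": {"fatigue"}, "migraine": {"headache"}}
tested = []


def run_test(disease):
    """Hypothetical test: record which disease was checked; only flu is positive."""
    tested.append(disease)
    return disease == "flu"


print(diagnose({"fever", "fatigue"}, signs, ["anemia", "flu", "migraine"], run_test))  # flu
print(tested)  # ['anemia', 'flu']
```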

5.12.6 Knowledge design

After knowledge analysis is done, we enter the knowledge design phase. At the end of this phase, we have:

• Knowledge definition
• Detailed design
• Decision on how to represent knowledge
  o Rules and logic
  o Frames
• Decision on a development tool. Consider whether it supports your planned strategy.
• Internal fact structure
• Mock interface

5.12.7 Code

This phase occupies the least time in the ESDLC. It involves coding, preparing test cases, commenting code, and developing the user's manual and installation guide. At the end of this phase the system is ready to be tested.

5.12.8 CLIPS

We will now look at a tool for expert system development. CLIPS stands for C Language Integrated Production System. CLIPS is an expert system tool that provides a complete environment for the construction of rule-based and object-based expert systems. Download CLIPS for Windows (CLIPSWin.zip) from:

http://www.ghg.net/clips/download/executables/pc/

Also download the complete documentation, including the programming guide, from:

http://www.ghg.net/clips/download/documentation/

The guides that you download provide comprehensive guidance on programming in CLIPS. Here are some of the basics to get you started.

Entering and Exiting CLIPS

When you start the executable, you will see the prompt

    CLIPS>

Commands can be entered here. To leave CLIPS, enter

    (exit)

All commands use ( ) as delimiters, i.e. all commands are enclosed in parentheses. A simple command example for adding numbers:

    CLIPS> (+ 3 4)
    7

Fields

Fields are the basic tokens that can be used in CLIPS. They can be:

• Numeric fields: consist of a sign, a value and an exponent
  o Float, e.g. 3.5e-10
  o Integer, e.g. -1, 3
• Symbol: a sequence of ASCII characters that ends with a delimiter, e.g. family
• String: begins and ends with double quotation marks, e.g. "Ali is Ahmed's brother"

Remember that CLIPS is case sensitive.

The Deftemplate construct

Before facts can be added, we have to define the format for our relations. Each relation consists of a relation name and zero or more slots (the arguments of the relation). The deftemplate construct defines a relation's structure:

    (deftemplate <relation-name> [<optional comment>]
       <slot-definition>*)

e.g.

    CLIPS> (deftemplate father "Relation father"
              (slot fathersName)
              (slot sonsName))

Adding facts

Facts are added in the predicate format. The deftemplate construct is used to inform CLIPS of the structure of facts. The set of all known facts is called the fact list. To add facts to the fact list, use the assert command. For example, suppose the facts to add are:

    man(ahmed)
    father(ahmed, belal)
    brother(ahmed, chand)

The first two are entered as follows (the man fact assumes a man deftemplate with a name slot, defined in the same way as father):

    CLIPS> (assert (man (name "Ahmed")))
    CLIPS> (assert (father (fathersName "Ahmed") (sonsName "Belal")))

Viewing the fact list

After adding facts, you can see the fact list using the command (facts). You will see that a fact index is assigned to each fact, starting with 0. For long fact lists, use the format

    (facts [<start> [<end>]])

For example, (facts 1 10) lists fact numbers 1 through 10.

Removing facts

The retract command is used to remove, or retract, facts. For example:

    (retract 1)      ; removes fact 1
    (retract 1 3)    ; removes facts 1 and 3

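Modifying and duplicating facts

We add a fact (if an identical fact is already in the fact list, CLIPS does not add it a second time):

    CLIPS> (assert (father (fathersName "Ahmed") (sonsName "Belal")))

To modify the fathersName slot of this fact (assumed here to have index 2), enter the following:

    CLIPS> (modify 2 (fathersName "Ali Ahmed"))

Notice that a new index is assigned to the modified fact. To duplicate a fact, give the index of an existing fact together with new values for some of its slots; the slots must belong to that fact's deftemplate. For example, if the modified fact received index 3:

    CLIPS> (duplicate 3 (sonsName "Chand"))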
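The bookkeeping behind these commands can be modeled in a few lines of Python. This is a toy model for illustration only, not the CLIPS API: the class and method names are hypothetical, indices start at 0 as described above, an identical fact is not added twice (mirroring CLIPS's default behavior), and slot names are checked against a template the way a deftemplate constrains them. In this model modify retracts the old fact and asserts the changed copy under a new index, while duplicate keeps the original.

```python
class FactList:
    """Toy model of a CLIPS-style fact list (illustration only, not the CLIPS API)."""

    def __init__(self, templates):
        self.templates = templates  # relation name -> tuple of slot names
        self.facts = {}             # fact index -> (relation, {slot: value})
        self.next_index = 0         # indices start at 0 and are never reused

    def _check_slots(self, relation, slots):
        unknown = set(slots) - set(self.templates[relation])
        if unknown:
            raise ValueError(f"{relation} has no slot(s) {sorted(unknown)}")

    def assert_fact(self, relation, **slots):
        """Add a fact and return its index, or None if an identical fact exists."""
        self._check_slots(relation, slots)
        fact = (relation, dict(slots))
        if fact in self.facts.values():
            return None
        index = self.next_index
        self.facts[index] = fact
        self.next_index += 1
        return index

    def retract(self, *indices):
        for index in indices:
            del self.facts[index]

    def modify(self, index, **changes):
        """Retract the old fact and assert the changed copy under a new index."""
        relation, slots = self.facts[index]
        self._check_slots(relation, changes)
        self.retract(index)
        return self.assert_fact(relation, **{**slots, **changes})

    def duplicate(self, index, **changes):
        """Assert a changed copy under a new index, keeping the original fact."""
        relation, slots = self.facts[index]
        self._check_slots(relation, changes)
        return self.assert_fact(relation, **{**slots, **changes})


facts = FactList({"man": ("name",), "father": ("fathersName", "sonsName")})
facts.assert_fact("man", name="Ahmed")                              # index 0
facts.assert_fact("father", fathersName="Ahmed", sonsName="Belal")  # index 1
print(facts.modify(1, fathersName="Ali Ahmed"))                     # 2
print(facts.duplicate(2, sonsName="Chand"))                         # 3
```

The indices differ from the walkthrough above only because fewer facts were asserted first; calling duplicate with a slot the template lacks (such as name on a father fact) raises an error here.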

The WATCH command

The watch command is used for debugging programs. It is used to view facts as they are asserted, retracted and modified. The command is

    CLIPS> (watch facts)

After you enter this command, the whole sequence of events is shown for all subsequent commands. To turn off this option, use:

    (unwatch facts)

The DEFFACTS construct

The deffacts construct defines a set of facts that are automatically asserted when the (reset) command is used, to set the working memory to its initial state (reset first removes the existing facts). For example:

    CLIPS> (deffacts myFacts "My known facts"
              (man (name "Ahmed"))
              (father (fathersName "Ahmed") (sonsName "Belal")))

The Components of a rule

The defrule construct is used to add rules. Before a rule is used, the facts it refers to need to be defined. For example, if we have the rule

    IF Ali is Ahmed's father
    THEN Ahmed is Ali's son

we enter it into CLIPS using the following construct (the son fact assumes a son deftemplate with sonsName and fathersName slots):

    ;Rule header
    (defrule isSon "An example rule"
       ;Patterns
       (father (fathersName "ali") (sonsName "ahmed"))
       ;THEN
       =>
       ;Actions
       (assert (son (sonsName "ahmed") (fathersName "ali"))))

CLIPS attempts to match the patterns of the rules against the facts in the fact list. If all the patterns of a rule match, the rule is activated, i.e. placed on the agenda.

Agenda-driven control and execution

The agenda is the list of activated rules. We use the run command to run the agenda; running the agenda causes the rules on the agenda to be fired.

    CLIPS> (run)
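The cycle just described (match patterns, place activated rules on the agenda, fire them) can be sketched in Python. This is a simplified model under stated assumptions, not how CLIPS is implemented: facts are plain strings, patterns contain no variables, each rule fires at most once (a crude form of refraction), and the first activation in rule order is chosen. CLIPS itself orders its agenda by salience and a conflict resolution strategy. The rule named announce is hypothetical.

```python
def run(rules, facts, limit=100):
    """Toy match-activate-fire cycle.

    rules: list of (name, patterns, new_facts); patterns and new_facts are lists.
    Returns (rule names in firing order, final set of facts).
    """
    facts = set(facts)
    fired = set()  # refraction: without variables, each rule fires only once
    order = []
    for _cycle in range(limit):
        # Match: a rule is activated when all of its patterns are in the fact list.
        agenda = [name for name, patterns, _new in rules
                  if name not in fired and set(patterns) <= facts]
        if not agenda:
            break  # empty agenda: the run stops
        # Fire one activation, then re-match against the updated facts.
        name = agenda[0]
        new_facts = next(new for n, _p, new in rules if n == name)
        facts.update(new_facts)
        fired.add(name)
        order.append(name)
    return order, facts


rules = [
    ("announce", ["son ahmed ali"], ["message printed"]),
    ("isSon", ["father ali ahmed"], ["son ahmed ali"]),
]
print(run(rules, ["father ali ahmed"])[0])  # ['isSon', 'announce']
```

Although announce is listed first, it only becomes active after isSon has fired and added the son fact, which is the essence of agenda-driven execution.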

Displaying the agenda

To display the set of rules on the agenda, enter the command

    (agenda)

Watching activations and rules

You can watch activations in the agenda by entering

    (watch activations)

You can watch rules firing using

    (watch rules)

All subsequent activations and firings will be shown until you turn the watch off using the unwatch command.

Clearing all constructs

    (clear)

removes all constructs from CLIPS and clears the working memory.

The PRINTOUT command

Instead of asserting facts in a rule, you can print out messages using

    (printout t "Ali is Ahmed's son" crlf)

Here t directs the output to the terminal, and crlf ends the line.

The SET-BREAK command

This is a debugging command that halts execution of the agenda just before a specified rule (the breakpoint) fires:

    (set-break isSon)

Once execution stops, run is used to resume it. (remove-break isSon) is used to remove the specified breakpoint. Use (show-breaks) to view all breakpoints.

Loading and saving constructs

Commands cannot be loaded from a file with load; they have to be entered at the command prompt (a file of commands can instead be executed with the batch command). However, constructs like deftemplate, deffacts and defrule can be loaded from a file that has been saved with a .clp extension. The command to load the file is:

    (load "filename.clp")

You can write constructs in a text editor, save the file and then load it. Also,

    (save "filename.clp")

saves all constructs currently loaded in CLIPS to the specified file.

Pattern matching

Variables in CLIPS are preceded by ?, e.g.

    ?speed
    ?name

Variables are used on the left-hand side of a rule. They are bound to values as patterns are matched, and once bound they may be referenced on the right-hand side of the rule.

Multifield variables may be bound to zero or more fields of a pattern. They are preceded by $?, e.g. $?name will match an entire name (last, middle and first).
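To make the difference between ? and $? concrete, here is a small recursive matcher in Python for ordered facts represented as lists of fields. It is only a sketch of the matching semantics, with an assumed function name and representation; CLIPS itself matches patterns with the Rete algorithm and supports many more pattern features. A bare ? or $? acts as a wildcard that matches without binding anything, as in (child-of ?name ?) in Example 1.

```python
def match(pattern, fact, bindings=None):
    """Match a pattern against a fact; both are lists of fields.

    '?x' matches exactly one field and binds it to x; '$?x' matches zero or
    more fields and binds them (as a tuple) to x; a bare '?' or '$?' matches
    without binding; any other token must be equal (case sensitive).
    Returns a dict of bindings, or None if the pattern does not match.
    """
    bindings = dict(bindings or {})
    if not pattern:
        return bindings if not fact else None
    head, rest = pattern[0], pattern[1:]
    if head.startswith("$?"):
        name = head[2:]
        for split in range(len(fact) + 1):  # try 0, 1, 2, ... fields
            value = tuple(fact[:split])
            if name and name in bindings and bindings[name] != value:
                continue  # inconsistent with an earlier binding
            trial = {**bindings, name: value} if name else bindings
            result = match(rest, fact[split:], trial)
            if result is not None:
                return result
        return None
    if not fact:
        return None
    if head.startswith("?"):
        name = head[1:]
        if name:
            if name in bindings and bindings[name] != fact[0]:
                return None  # same variable must bind the same value
            bindings[name] = fact[0]
        return match(rest, fact[1:], bindings)
    return match(rest, fact[1:], bindings) if head == fact[0] else None


print(match(["animal", "?x"], ["animal", "dog"]))                   # {'x': 'dog'}
print(match(["name", "$?name"], ["name", "Khan", "Ali", "Ahmed"]))  # {'name': ('Khan', 'Ali', 'Ahmed')}
print(match(["child-of", "?name", "?"], ["child-of", "cat", "kitten"]))  # {'name': 'cat'}
```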

Below are some examples to help you see the above concepts in practice:

Example 1

    ;This is a comment, anything after a semicolon is a comment
    ;Define initial facts
    (deffacts startup
       (animal dog) (animal cat) (animal duck) (animal turtle) (animal horse)
       (warm-blooded dog) (warm-blooded cat) (warm-blooded duck)
       (lays-eggs duck) (lays-eggs turtle)
       (child-of dog puppy) (child-of cat kitten) (child-of turtle hatchling))

    ;Define a rule that prints animal names
    (defrule animal
       (animal ?x)
       =>
       (printout t "animal found: " ?x crlf))

    ;Define a rule that identifies mammals
    (defrule mammal
       (animal ?name)
       (warm-blooded ?name)
       (not (lays-eggs ?name))
       =>
       (assert (mammal ?name))
       (printout t ?name " is a mammal" crlf))

    ;Define a rule that adds mammals
    (defrule mammal2
       (mammal ?name)
       (child-of ?name ?young)
       =>
       (assert (mammal ?young))
       (printout t ?young " is a mammal" crlf))

    ;Define a rule that removes mammals from fact list
    ;(defrule remove-mammals
    ;   ?fact <- (mammal ?)
    ;   =>
    ;   (printout t "retracting " ?fact crlf)
    ;   (retract ?fact))
    ;
    ;Define rule that adds child's name after asking user
    (defrule what-is-child
       (animal ?name)
       (not (child-of ?name ?))
       =>
       (printout t "What do you call the child of a " ?name "?")
       (assert (child-of ?name (read))))

Example 2

    ;OR example
    ;note: CLIPS operators use prefix notation
    (deffacts startup (weather raining))

    (defrule take-umbrella
       (or (weather raining)
           (weather snowing))
       =>
       (assert (umbrella required)))

These two are very basic examples. You will find many more examples in the CLIPS documentation that you download. Try out these examples.

Below is the code for the case study discussed in the lectures: the automobile diagnosis problem given in Durkin's book. This is an implementation of the solution, which Durkin presents as rules in your book.

    ;Helper functions for asking user questions

    ;Ask ?question until the reply is one of ?allowed-values or "q";
    ;"q" clears CLIPS. Returns the reply as a string.
    (deffunction ask-question (?question $?allowed-values)
       (printout t ?question)
       (bind ?answer (readline))
       (while (and (not (member ?answer ?allowed-values)) (not (eq ?answer "q"))) do
          (printout t ?question)
          (bind ?answer (readline)))
       (if (eq ?answer "q")
          then (clear))
       ?answer)

    ;Ask a yes/no question; returns TRUE for "yes" or "y", FALSE otherwise
    (deffunction yes-or-no-p (?question)
       (bind ?response (ask-question ?question "yes" "no" "y" "n"))
       (if (or (eq ?response "yes") (eq ?response "y"))
          then TRUE
          else FALSE))

    ;startup rule
    (deffacts startup (task begin))

    (defrule startDiagnosis
       ?fact <- (task begin)
       =>
       (retract ?fact)
       (assert (task test_cranking_system))
       (printout t "Auto Diagnostic Expert System" crlf)
    )

    ;---------------------------------
    ;Test Display Rules
    ;---------------------------------

    (defrule testTheCrankingSystem
       ?fact <- (task test_cranking_system)
       =>
       (printout t "Cranking System Test" crlf)
       (printout t "--------------------" crlf)
       (printout t "I want to first check out the major components of the cranking system. "
                   "This includes such items as the battery, cables, ignition switch and starter. "
                   "Usually, when a car does not start the problem can be found with one of these components" crlf)
       (printout t "Steps: Please turn on the ignition switch to energize the starting motor" crlf)
       (bind ?response
          (ask-question "How does your engine turn: (slowly or not at all/normal)? "
                        "slowly or not at all" "normal"))
       (assert (engine_turns ?response))
    )

    (defrule testTheBatteryConnection
       ?fact <- (task test_battery_connection)
       =>
       (printout t "Battery Connection Test" crlf)
       (printout t "-----------------------" crlf)
       (printout t "I next want to see if the battery connections are good. "
                   "Often, a bad connection will appear like a bad battery" crlf)
       (printout t "Steps: Insert a screwdriver between the battery post and the cable clamp. "
                   "Then turn the headlights on high beam and observe the lights as the screwdriver is twisted." crlf)
       (bind ?response
          (ask-question "What happens to the lights: (brighten/don't brighten/not on)? "
                        "brighten" "don't brighten" "not on"))
       (assert (screwdriver_test_shows_that_lights ?response))
    )

    (defrule testTheBattery
       ?fact <- (task test_battery)
       =>
       (printout t "Battery Test" crlf)
       (printout t "------------" crlf)
       (printout t "The state of the battery can be checked with a hydrometer. "
                   "This is a good test to determine the amount of charge in the battery "
                   "and is better than a simple voltage measurement" crlf)
       (printout t "Steps: Please test each battery cell with the hydrometer "
                   "and note each cell's specific gravity reading." crlf)
       (bind ?response
          (ask-question "Do all cells have a reading above 1.2: (yes/no)? "
                        "yes" "no" "y" "n"))
       (assert (battery_hydrometer_reading_good ?response))
    )

    (defrule testTheStartingSystem
       ?fact <- (task test_starting_system)
       =>
       (printout t "Starting System Test" crlf)
       (printout t "--------------------" crlf)
       (printout t "Since the battery looks good, I want to next test the starter and solenoid" crlf)
       (printout t "Steps: Please connect a jumper from the battery post of the solenoid "
                   "to the starter post of the solenoid. Then turn the ignition key." crlf)
       (bind ?response
          (ask-question "What happens after you make this connection and turn the key: (engine turns normally/starter buzzes/engine turns slowly/nothing)? "
                        "engine turns normally" "starter buzzes" "engine turns slowly" "nothing"))
       (assert (starter ?response))
    )

    (defrule testTheStarterOnBench
       ?fact <- (task test_starter_on_bench)
       =>
       (bind ?response
          (ask-question "Check your starter on bench: (meets specifications/doesn't meet specifications)? "
                        "meets specifications" "doesn't meet specifications"))
       (assert (starter_on_bench ?response))
    )

    (defrule testTheIgnitionOverrideSwitch
       ?fact <- (task test_ignition_override_switches)
       =>
       (bind ?response