
Introduction to Computing CS101 VU

LESSON 2

EVOLUTION OF COMPUTING

Today's Goal

- To learn about the evolution of computing
- To recount the key events in that evolution
- To identify some of the milestones in computer development

Babbage's Analytical Engine - 1833

- Mechanical, digital, general-purpose
- Crank-driven
- Could store instructions
- Could perform mathematical calculations
- Had the ability to print
- Could use punched cards as permanent memory (an idea taken from the loom invented by Joseph-Marie Jacquard)

2.1 Turing Machine 1936

Introduced by Alan Turing in 1936, Turing machines are one of the key abstractions used in modern computability theory, the study of what computers can and cannot do. A Turing machine is a particularly simple kind of computer, one whose operations are limited to reading and writing symbols on a tape, or moving along the tape to the left or right. The tape is marked off into squares, each of which can be filled with at most one symbol. At any given point in its operation, the Turing machine can only read or write on one of these squares, the square located directly below its "read/write" head.
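The description above leaves implicit the machine's finite set of internal states and its rule table, which says, for each state and symbol read, what to write, which way to move, and which state to enter next. The Python sketch below is one minimal way to simulate such a machine. The table format, the function name `run_turing_machine`, and the example binary-increment machine are illustrative assumptions, not part of Turing's original formulation.

```python
from collections import defaultdict

# Rule table (format assumed for this sketch):
#   (state, symbol_read) -> (symbol_to_write, move, next_state)
# where move is -1 for one square left and +1 for one square right.
# Hypothetical example machine: add 1 to a binary number.
INCREMENT = {
    ("right", "0"): ("0", +1, "right"),   # scan right to the end of the number
    ("right", "1"): ("1", +1, "right"),
    ("right", "_"): ("_", -1, "carry"),   # past the last digit: step back
    ("carry", "1"): ("0", -1, "carry"),   # 1 + carry = 0, carry moves left
    ("carry", "0"): ("1", -1, "done"),    # 0 + carry = 1, finished
    ("carry", "_"): ("1", -1, "done"),    # ran off the left end: new leading 1
}


def run_turing_machine(rules, tape, start_state, halt_state, blank="_", max_steps=10_000):
    """Simulate a single-tape Turing machine; return the final tape contents."""
    # Squares never written hold the blank symbol, so the tape is unbounded both ways.
    cells = defaultdict(lambda: blank, enumerate(tape))
    head, state, steps = 0, start_state, 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells[head]                      # read the square under the head
        write, move, state = rules[(state, symbol)]
        cells[head] = write                       # each square holds one symbol
        head += move                              # move one square left or right
        steps += 1
    # Return the visited stretch of tape without the surrounding blanks.
    return "".join(cells[i] for i in range(min(cells), max(cells) + 1)).strip(blank)


print(run_turing_machine(INCREMENT, "1011", "right", "done"))  # prints 1100
```

With the head starting on the leftmost digit, the example machine turns `1011` (eleven) into `1100` (twelve). A dictionary keyed by square position stands in for the unbounded tape, so the head can move past either end without running out of squares.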

2.2 The "Turing Test"

The Turing test is a test proposed to determine whether a computer has the ability to think. In 1950, Alan Turing (Turing, 1950) proposed this method for determining whether machines can think.

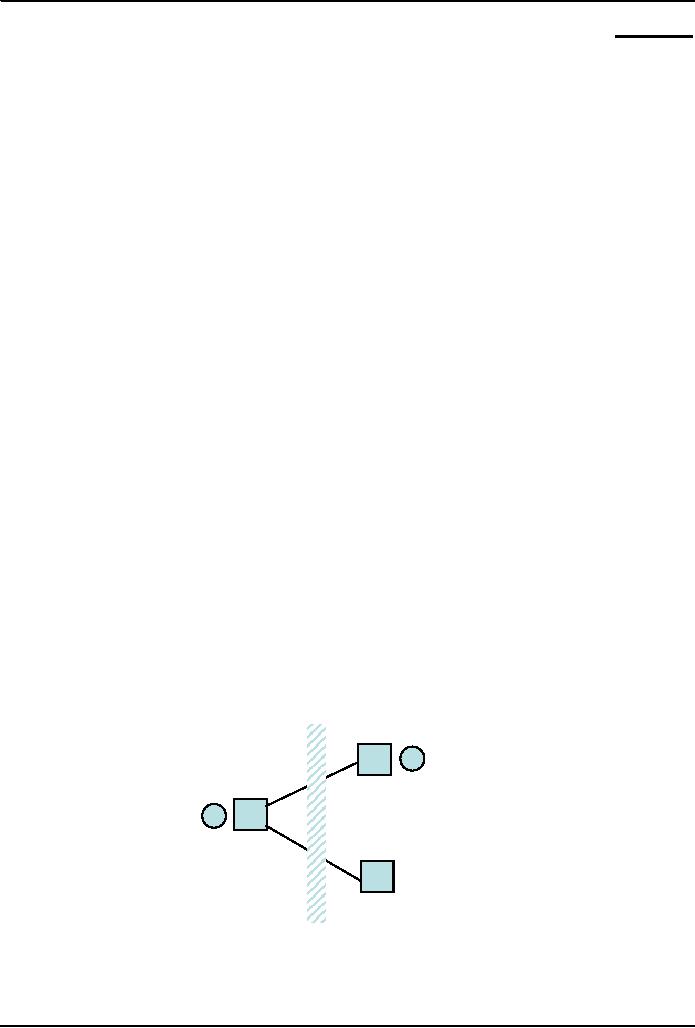

The test is conducted with two people and a machine. One person plays the role of an interrogator and is in a separate room from the machine and the other person. The interrogator knows the person and the machine only as A and B, and does not know which is the person and which is the machine. Using a teletype, the interrogator can ask A and B any question he or she wishes. The aim of the interrogator is to determine which is the person and which is the machine. The aim of the machine is to fool the interrogator into thinking that it is a person. If the machine succeeds, then, by Turing's criterion, we can conclude that machines can think.

Figure: The Turing test. The interrogator, at a terminal, asks questions; a human and a computer, each at its own terminal, provide answers.

2.3 Vacuum Tube 1904

A vacuum tube is just that: a glass tube surrounding a vacuum (an area from which all gases have been removed). What makes it interesting is that when electrical contacts are put on the ends, you can get a current to flow through that vacuum.


In 1904, a British scientist named John A. Fleming made a vacuum tube known today as a diode. At the time, the diode was known as a "valve."

2.4 ABC 1939

The Atanasoff-Berry Computer (ABC) is often credited as the world's first electronic digital computer. It was built by John Vincent Atanasoff and Clifford Berry at Iowa State University during 1937-42. It incorporated several major innovations in computing, including the use of binary arithmetic, regenerative memory, parallel processing, and the separation of memory and computing functions.

2.5 Harvard Mark I 1943

Howard Aiken designed the Mark series of computers at Harvard University, and Grace Hopper was one of the first programmers of the Mark I. The Mark series began with the Mark I in 1944. Imagine a giant roomful of noisy, clicking metal parts, 55 feet long and 8 feet high. The 5-ton device contained almost 760,000 separate pieces. Used by the US Navy for gunnery and ballistic calculations, the Mark I was in operation until 1959.

The computer, controlled by pre-punched paper tape, could carry out addition, subtraction, multiplication, division, and reference to previous results. It had special subroutines for logarithms and trigonometric functions and used 23-digit decimal numbers. Data was stored and counted mechanically using 3,000 decimal storage wheels, 1,400 rotary dial switches, and 500 miles of wire. Its electromagnetic relays classified the machine as a relay computer. All output was displayed on an electric typewriter. By today's standards, the Mark I was slow, requiring 3-5 seconds for a multiplication operation.

2.6 ENIAC 1946

ENIAC stands for Electronic Numerical Integrator And Computer. The U.S. military sponsored its development because it needed a calculating device for writing artillery-firing tables (the settings used for different weapons under varied conditions for target accuracy).

John Mauchly was the chief consultant and J. Presper Eckert was the chief engineer. Eckert was a graduate student at the Moore School when he met John Mauchly in 1943. It took the team about one year to design the ENIAC and 18 months and 500,000 tax dollars to build it. The ENIAC contained 17,468 vacuum tubes, along with 70,000 resistors and 10,000 capacitors.

2.7 Transistor 1947

The first transistor was invented at Bell Laboratories on December 16, 1947, by John Bardeen and Walter Brattain, working in a group led by William Shockley. This was perhaps the most important electronics event of the 20th century, as it later made possible the integrated circuit and the microprocessor that are the basis of modern electronics.

The name "transistor" is a contraction of TRANSfer resISTOR. Before the transistor, the only device that could perform its current regulation and switching functions was the vacuum tube, which could only be miniaturized to a certain extent and wasted a lot of energy in the form of heat. Compared to vacuum tubes, the transistor offered:

- smaller size
- better reliability
- lower power consumption
- lower cost

2.8 Floppy Disk 1950

According to some accounts, the floppy disk was invented at the Imperial University in Tokyo by Yoshiro Nakamatsu.


2.9 UNIVAC I 1951

The first commercially successful electronic computer, UNIVAC I, was also the first general-purpose computer, designed to handle both numeric and textual information. It was designed by J. Presper Eckert and John Mauchly. The implementation of this machine marked the real beginning of the computer era. Remington Rand delivered the first UNIVAC machine to the U.S. Bureau of the Census in 1951. This machine used magnetic tape for input.

- First successful commercial computer design, derived from the ENIAC (same developers)
- First client: U.S. Bureau of the Census
- Price: $1 million
- 48 systems built

2.10 Compiler 1952

Grace Murray Hopper, an employee of Remington Rand, worked on the UNIVAC. She took up the concept of reusable software in her 1952 paper entitled "The Education of a Computer" and developed the first software that could translate the symbols of higher-level computer languages into machine language: a compiler.
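To make the idea of translation concrete, the Python sketch below turns a simple arithmetic expression such as `a + b * 2` into instructions for a hypothetical stack machine and then runs them. The instruction names (`PUSH`, `LOAD`, `ADD` and so on), the machine itself, and the function names are assumptions made for illustration; this is not how Hopper's 1952 system worked, only a miniature of the general idea of turning higher-level notation into lower-level instructions. It relies on Python's standard `ast` module to parse the expression.

```python
import ast

# Hypothetical stack-machine instructions used by this sketch:
#   PUSH n - push the number n;  LOAD x - push the value of variable x
#   ADD, SUB, MUL, DIV - pop two values, push the result
OPCODES = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MUL", ast.Div: "DIV"}


def compile_expr(source):
    """Translate an arithmetic expression into a list of stack-machine instructions."""
    code = []

    def emit(node):
        if isinstance(node, ast.BinOp):
            emit(node.left)                       # operands first ...
            emit(node.right)
            code.append(OPCODES[type(node.op)])   # ... then the operator
        elif isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            code.append(f"PUSH {node.value}")
        elif isinstance(node, ast.Name):
            code.append(f"LOAD {node.id}")
        else:
            raise SyntaxError(f"unsupported construct: {ast.dump(node)}")

    emit(ast.parse(source, mode="eval").body)
    return code


def run(code, variables):
    """Execute the instructions on a simple stack and return the final value."""
    stack = []
    for instruction in code:
        op, _, arg = instruction.partition(" ")
        if op == "PUSH":
            stack.append(float(arg))
        elif op == "LOAD":
            stack.append(variables[arg])
        else:
            right, left = stack.pop(), stack.pop()   # top of stack is the right operand
            if op == "ADD":
                stack.append(left + right)
            elif op == "SUB":
                stack.append(left - right)
            elif op == "MUL":
                stack.append(left * right)
            else:  # DIV
                stack.append(left / right)
    return stack.pop()


program = compile_expr("a + b * 2")
print(program)                         # ['LOAD a', 'LOAD b', 'PUSH 2', 'MUL', 'ADD']
print(run(program, {"a": 3, "b": 4}))  # 11.0
```

The translator walks the expression tree and emits each operator after its operands, so operator precedence is already resolved by the time the instructions run; the machine never needs to know that `*` binds tighter than `+`.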

2.11 ARPANET 1969

The Advanced Research Projects Agency (ARPA) was formed with an emphasis on research, and thus was not oriented only to military products. The formation of this agency was part of the U.S. reaction to the Soviet Union's launch of Sputnik in 1957 (ARPA draft, III-6). ARPA was assigned to research how to make use of its investment in computers through Command and Control Research (CCR). Dr. J.C.R. Licklider was chosen to head this effort.

- Developed for the US DoD Advanced Research Projects Agency
- 60,000 computers connected for communication among research organizations and universities

2.12 Intel 4004 1971

The 4004 was the world's first universal microprocessor. In the late 1960s, many scientists had discussed the possibility of a computer on a chip, but nearly everyone felt that integrated circuit technology was not yet ready to support such a chip. Intel's Ted Hoff felt differently; he was the first person to recognize that the new silicon-gate MOS technology might make a single-chip CPU (central processing unit) possible.

Hoff and the Intel team developed such an architecture with just over 2,300 transistors in an area of only 3 by 4 millimeters. With its 4-bit CPU, command register, decoder, decoding control, control monitoring of machine commands, and interim register, the 4004 was one heck of a little invention. Today's 64-bit microprocessors are still based on similar designs, and the microprocessor is still the most complex mass-produced product ever, with more than 5.5 million transistors performing hundreds of millions of calculations each second, numbers that are sure to be outdated fast.

2.13 Altair 8800 1975

By 1975 the market for the personal computer was demanding a product that did not require an electrical engineering background, and thus the first mass-produced and marketed personal computer (available both as a kit and assembled) was welcomed with open arms. Developers Edward Roberts, William Yates, and Jim Bybee spent 1973-1974 developing the MITS (Micro Instrumentation and Telemetry Systems) Altair 8800, reportedly named after a destination in a Star Trek episode. It was priced at $375 and contained 256 bytes of memory (not 256K), but had no keyboard, no display, and no auxiliary storage device. Later, Bill Gates and Paul Allen wrote their first product for the Altair: a BASIC interpreter.

2.14 Cray 1 1976

It looked like no other computer before, or for that matter, since. The Cray 1 was the world's first "supercomputer," a machine that leapfrogged existing technology when it was introduced in 1976. And back then, you couldn't just order up fast processors from Intel. "There weren't any microprocessors," says Gwen Bell of The Computer Museum History Center. "These individual integrated circuits that are on the board performed different functions."

Each Cray 1, like the one at The Computer Museum History Center, took months to build. The hundreds of boards and thousands of wires had to fit just right. "It was really a hand-crafted machine," adds Bell. "You think of all these wires as a kind of mess, but each one has a precise length."

2.15 IBM PC 1981

On August 12, 1981, IBM released its new computer, the IBM PC. The "PC" stood for "personal computer," making IBM responsible for popularizing the term "PC." The first IBM PC ran on a 4.77 MHz Intel 8088 microprocessor. It came equipped with 16 kilobytes of memory, expandable to 256K, one or two 160K floppy disk drives, and an optional color monitor. The price tag started at $1,565, which would be nearly $4,000 today.


2.16 Apple Macintosh 1984

Apple introduced the Macintosh to the nation on January 22, 1984. The original Macintosh had 128 kilobytes of RAM; this first model was simply called "Macintosh" until the 512K model came out in September 1984. The Macintosh retailed for $2,495. It wasn't until the Macintosh that the general population really became aware of the mouse-driven graphical user interface.

2.17 World Wide Web 1989

CERN is a meeting place for physicists from all over the world, who collaborate on complex physics, engineering, and information handling projects. Thus, the need for the WWW system arose "from the geographical dispersion of large collaborations, and the fast turnover of fellows, students, and visiting scientists," who had to get "up to speed on projects and leave a lasting contribution before leaving."

CERN possessed both the financial and computing resources necessary to start the project. In the original proposal, Tim Berners-Lee outlined two phases of the project:

- First, CERN would "make use of existing software and hardware as well as implementing simple browsers for the user's workstations, based on an analysis of the requirements for information access needs by experiments."
- Second, they would "extend the application area by also allowing the users to add new material."

Berners-Lee expected each phase to take three months "with the full manpower complement": he was asking for four software engineers and a programmer. The proposal talked about "a simple scheme to incorporate several different servers of machine-stored information already available at CERN."

Begun in 1989, the WWW quickly gained great popularity among Internet users. For instance, at 11:22 a.m. on April 12, 1995, the WWW server at the School of Engineering and Applied Science (SEAS) of the University of Pennsylvania "responded to 128 requests in one minute. Between 10:00 and 11:00

2.18 Quantum Computing with Molecules

by Neil Gershenfeld and Isaac L. Chuang

Factoring a number with 400 digits, a numerical feat needed to break some security codes, would take even the fastest supercomputer in existence billions of years. But a newly conceived type of computer, one that exploits quantum-mechanical interactions, might complete the task in a year or so, thereby defeating many of the most sophisticated encryption schemes in use. Sensitive data are safe for the time being, because no one has been able to build a practical quantum computer. But researchers have now demonstrated the feasibility of this approach. Such a computer would look nothing like the machine that sits on your desk; surprisingly, it might resemble the cup of coffee at its side.
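To see why factoring large numbers is so costly for conventional computers, consider trial division, the simplest factoring method: try every candidate divisor up to the square root of the number. The Python sketch below counts how many divisions it performs; the function name and the step counting are illustrative choices. Trial division is far slower than the methods a real supercomputer would use, so this sketch only shows how the work grows with the number of digits; it does not reproduce the "billions of years" estimate in the article.

```python
def trial_division(n):
    """Factor n (n >= 2) by trying divisors 2, 3, 4, ... up to sqrt(n).

    Returns (factors, divisions), where divisions counts the trial divisions made.
    """
    factors = []
    divisions = 0
    d = 2
    while d * d <= n:
        divisions += 1
        if n % d == 0:
            factors.append(d)   # d is the smallest remaining prime factor
            n //= d
        else:
            d += 1
    if n > 1:
        factors.append(n)       # whatever is left over is prime
    return factors, divisions


# A product of two primes of similar size is the hard case: the loop must
# count all the way up to the smaller prime before it finds a divisor.
for p, q in [(101, 103), (1009, 1013), (10007, 10009)]:
    factors, divisions = trial_division(p * q)
    print(p * q, factors, divisions)
```

Each extra digit in the smaller prime multiplies the work by roughly ten, so a 400-digit number built from two 200-digit primes would need on the order of 10^200 trial divisions.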

Several research groups believe quantum computers based on the molecules in a liquid might one day overcome many of the limits facing conventional computers. Roadblocks to improving conventional computers will ultimately arise from the fundamental physical bounds to miniaturization (for example, because transistors and electrical wiring cannot be made slimmer than the width of an atom). Or they may come about for practical reasons, most likely because the facilities for fabricating still more powerful microchips will become prohibitively expensive. Yet the magic of quantum mechanics might solve both these problems.
