
Human Computer Interaction (CS408) VU

Lecture 7. Human Input-Output Channels, Part I

Learning Goals

The aim of this lecture is to introduce you to the study of Human Computer Interaction, so that after studying this lecture you will be able to:

• Understand the role of input-output channels

• Describe human eye physiology, and

• Discuss visual perception

7.1 Input-Output channels

A person's interaction with the outside world occurs through information being received and sent: input and output. In an interaction with a computer, the user receives information that is output by the computer, and responds by providing input to the computer; the user's output becomes the computer's input and vice versa. Consequently, the use of the terms input and output may lead to confusion, so we shall blur the distinction somewhat and concentrate on the channels involved. This blurring is appropriate since, although a particular channel may have a primary role as input or output in the interaction, it is more than likely that it is also used in the other role. For example, sight may be used primarily in receiving information from the computer, but it can also be used to provide information to the computer, for example by fixating on a particular screen point when using an eye-gaze system.

Input in humans is mainly through the senses, and output is through the motor control of the effectors. There are five major senses:

• Sight

• Hearing

• Touch

• Taste

• Smell

Of these, the first three are the most important to HCI. Taste and smell do not currently play a significant role in HCI, and it is not clear whether they could be exploited at all in general computer systems, although they could have a role to play in more specialized systems or in augmented reality systems. However, vision, hearing and touch are central.

Similarly, there are a number of effectors:

• Limbs


• Fingers

• Eyes

• Head

• Vocal system

In interaction with a computer, the fingers play the primary role, through typing or mouse control, with some use of voice, and of eye, head and body position.

Imagine using a personal computer with a mouse and a keyboard. The application you are using has a graphical interface, with menus, icons and windows. In your interaction with this system you receive information primarily by sight, from what appears on the screen. However, you may also receive information by ear: for example, the computer may 'beep' at you if you make a mistake or to draw attention to something, or there may be a voice commentary in a multimedia presentation. Touch plays a part too, in that you will feel the keys moving (also hearing the 'click') or the orientation of the mouse, which provides vital feedback about what you have done.

You yourself send information to the computer using your hands, either by hitting keys or by moving the mouse. Sight and hearing do not play a direct role in sending information in this example, although they may be used to receive information from a third source (e.g., a book or the words of another person) which is then transmitted to the computer.

7.2 Vision

Human vision is a highly complex activity with a range of physical and perceptual limitations, yet it is the primary source of information for the average person. We can roughly divide visual perception into two stages:

• the physical reception of the stimulus from the outside world, and

• the processing and interpretation of that stimulus.

On the one hand, the physical properties of the eye and the visual system mean that there are certain things that cannot be seen by the human; on the other hand, the interpretative capabilities of visual processing allow images to be constructed from incomplete information.

We need to understand both stages, as both influence what can and cannot be perceived visually by a human being, which in turn directly affects the way that we design computer systems. We will begin by looking at the eye as a physical receptor, and then go on to consider the processing involved in basic vision.

The human eye

Vision begins with light. The eye is a mechanism for receiving light and transforming it into electrical energy. Light is reflected from objects in the world, and their image is focused upside down on the back of the eye. The receptors in the eye transform it into electrical signals, which are passed to the brain.


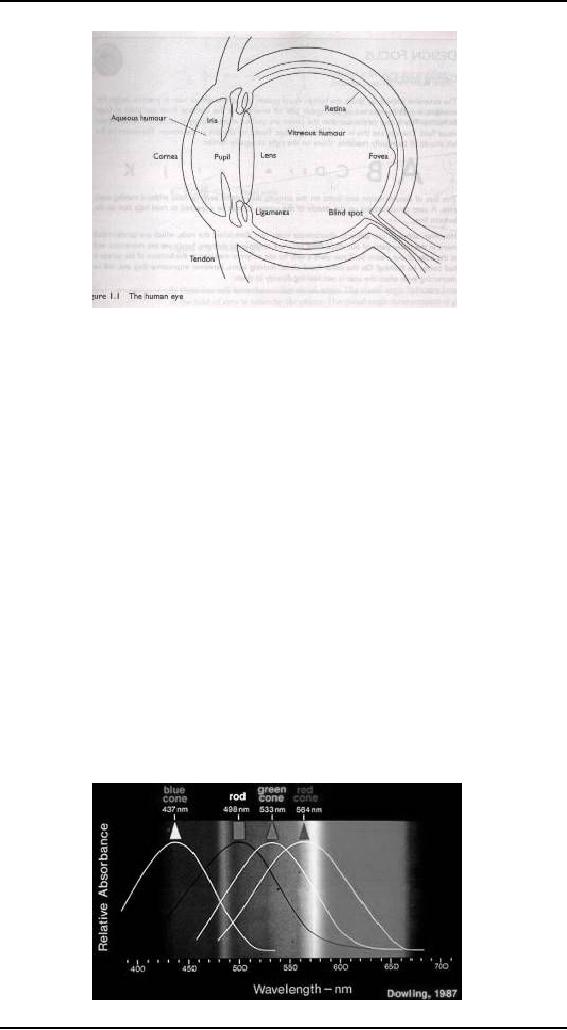

The eye has a number of important components, as you can see in the figure. Let us take a deeper look. The cornea and lens at the front of the eye focus the light into a sharp image on the back of the eye, the retina. The retina is light sensitive and contains two types of photoreceptor: rods and cones.

Rods

Rods are highly sensitive to light and therefore allow us to see under a low level of illumination. However, they are unable to resolve fine detail and are subject to light saturation. This is the reason for the temporary blindness we get when moving from a darkened room into sunlight: the rods have been active and are saturated by the sudden light. The cones do not operate either, as they are suppressed by the rods. We are therefore temporarily unable to see at all. There are approximately 120 million rods per eye, which are mainly situated towards the edges of the retina. Rods therefore dominate peripheral vision.

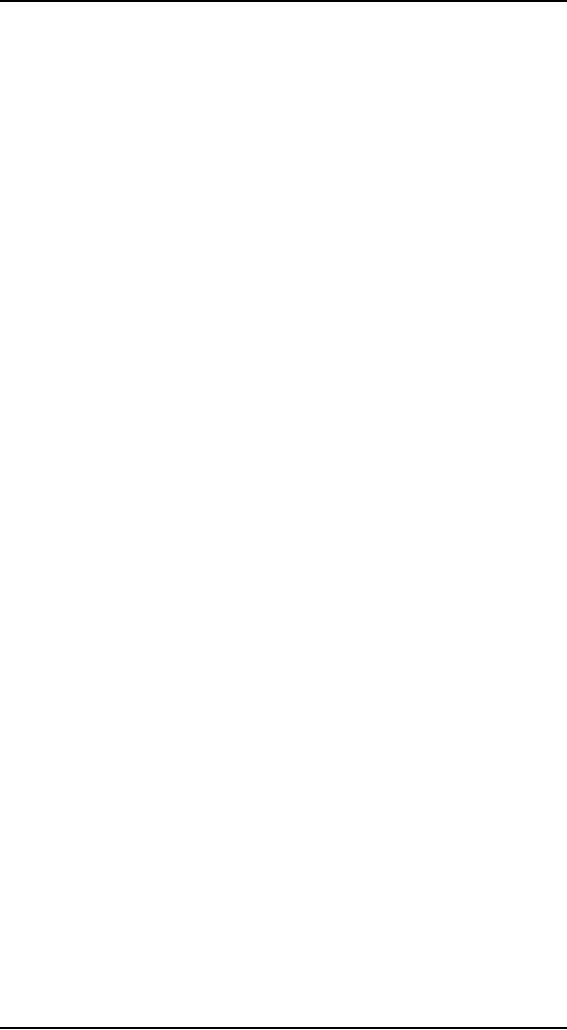

Cones

Cones are the second type of receptor in the eye. They are less sensitive to light than the rods and can therefore tolerate more light. There are three types of cone, each sensitive to a different wavelength of light. This allows color vision. The eye has approximately 6 million cones, mainly concentrated on the fovea.


Fovea

The fovea is a small area of the retina on which images are fixated.

Blind spot

The blind spot is also situated on the retina. Although the retina is mainly covered with photoreceptors, there is one blind spot where the optic nerve enters the eye. The blind spot has no rods or cones, yet our visual system compensates for this so that in normal circumstances we are unaware of it.

Nerve cells

The retina also has specialized nerve cells called ganglion cells. There are two types:

X-cells

These are concentrated in the fovea and are responsible for the early detection of pattern.

Y-cells

These are more widely distributed in the retina and are responsible for the early detection of movement. The distribution of these cells means that, while we may not be able to detect changes in pattern in peripheral vision, we can perceive movement.

7.3 Visual perception

Understanding the basic construction of the eye goes some way to explaining the physical mechanism of vision, but visual perception is more than this. The information received by the visual apparatus must be filtered and passed to processing elements which allow us to recognize coherent scenes, disambiguate relative distances and differentiate color. Let us see how we perceive size and depth, brightness and color, each of which is crucial to the design of effective visual interfaces.

Perceiving size and depth

Imagine you are standing on a hilltop. Beside you on the summit you can see rocks, sheep and a small tree. On the hillside is a farmhouse with outbuildings and farm vehicles. Someone is on the track, walking toward the summit. Below in the valley is a small market town.

Even in describing such a scene, the notions of size and distance predominate. Our visual system is easily able to interpret the images it receives to take account of these things. We can identify similar objects regardless of the fact that they appear to us to be vastly different sizes. In fact, we can use this information to judge distance.

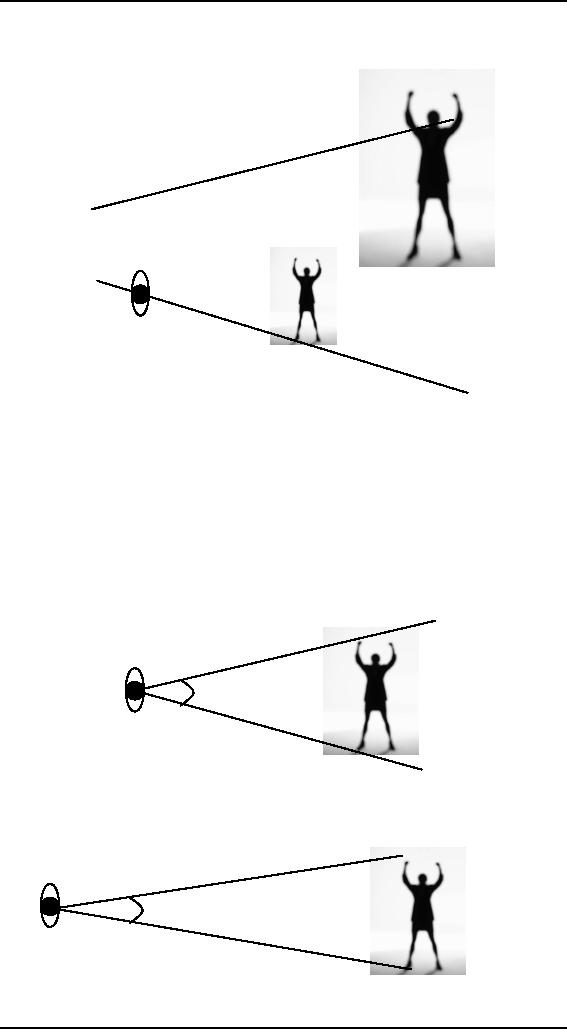

So how does the eye perceive size, depth and relative distances? To understand this we must consider how the image appears on the retina. As we mentioned, reflected light from the object forms an upside-down image on the retina. The size of that image is specified as a visual angle. The figure illustrates how the visual angle is calculated.


If we were to draw a line from the top of the object to a central point on the front of the eye, and a second line from the bottom of the object to the same point, the visual angle of the object is the angle between these two lines. Visual angle is affected by both the size of the object and its distance from the eye. Therefore, if two objects are at the same distance, the larger one will have the larger visual angle. Similarly, if two objects of the same size are placed at different distances from the eye, the farther one will have the smaller visual angle, as shown in the figure.
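The relationship between size, distance and visual angle described above can be written as V = 2·arctan(S / 2D), where S is the object's size and D its distance from the eye, assuming the object is centred on the line of sight. The short Python sketch below is only an illustration of that formula; the function name and the choice of units are our own, not the lecture's.

```python
import math


def visual_angle_deg(size: float, distance: float) -> float:
    """Return the visual angle, in degrees, of an object of height `size`
    viewed from `distance` (both in the same unit, e.g. metres).

    Assumes the object is centred on the line of sight, so that
    V = 2 * atan(size / (2 * distance)).
    """
    if size < 0 or distance <= 0:
        raise ValueError("size must be >= 0 and distance must be > 0")
    return math.degrees(2 * math.atan(size / (2 * distance)))


# Same distance: the larger object subtends the larger angle.
print(visual_angle_deg(2.0, 10.0) > visual_angle_deg(1.0, 10.0))  # True
# Same size: the farther object subtends the smaller angle.
print(visual_angle_deg(1.0, 20.0) < visual_angle_deg(1.0, 10.0))  # True
```

The two comparisons mirror the two cases in the text: varying size at a fixed distance, and varying distance at a fixed size.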


Visual angle indicates how much of the field of view is taken up by the object. The visual angle measurement is given in either degrees or minutes of arc, where 1 degree is equivalent to 60 minutes of arc, and 1 minute of arc to 60 seconds of arc.
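Because visual acuity limits are quoted in minutes and seconds of arc while formulas usually work in degrees, a small set of conversion helpers is handy. The sketch below simply applies 1 degree = 60 minutes of arc and 1 minute = 60 seconds of arc; the function names are hypothetical, and the degree/minute/second split is subject to ordinary floating-point rounding for values such as 0.1 degree.

```python
def degrees_to_arcmin(degrees: float) -> float:
    """Convert degrees to minutes of arc (1 degree = 60 arcmin)."""
    return degrees * 60.0


def degrees_to_arcsec(degrees: float) -> float:
    """Convert degrees to seconds of arc (1 degree = 60 * 60 = 3600 arcsec)."""
    return degrees * 3600.0


def split_dms(degrees: float) -> tuple:
    """Split a non-negative angle into whole degrees, whole minutes of arc
    and the remaining seconds of arc."""
    if degrees < 0:
        raise ValueError("angle must be non-negative")
    whole_deg = int(degrees)
    total_min = (degrees - whole_deg) * 60.0
    whole_min = int(total_min)
    seconds = (total_min - whole_min) * 60.0
    return whole_deg, whole_min, seconds


print(degrees_to_arcmin(0.5))  # 30.0
print(split_dms(1.5))          # (1, 30, 0.0)
```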

Visual acuity

So how does an object's visual angle affect our perception of its size? First, if the visual angle of an object is too small, we will be unable to perceive it at all. Visual acuity is the ability of a person to perceive fine detail. A number of measurements have been established to test visual acuity, most of which are included in standard eye tests.

For example, a person with normal vision can detect a single line if it has a visual angle of 0.5 seconds of arc. Spaces between lines can be detected at 30 seconds to 1 minute of visual arc. These represent the limits of human visual perception.
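These limits are angles, so what they mean on a screen depends on how far away the viewer is. Inverting the visual-angle formula gives the physical size S = 2D·tan(V/2) that subtends an angle V at distance D. The sketch below applies it to the thresholds quoted above; the 0.5 m viewing distance is an illustrative assumption, not a figure from the lecture, and the names are hypothetical.

```python
import math

# Thresholds quoted in the text, in seconds of arc.
SINGLE_LINE_ARCSEC = 0.5
GAP_ARCSEC_RANGE = (30.0, 60.0)  # 30 seconds to 1 minute of arc


def size_for_angle(distance: float, arcsec: float) -> float:
    """Physical size (same unit as `distance`) that subtends `arcsec`
    seconds of arc at `distance`: S = 2 * D * tan(V / 2)."""
    angle_rad = math.radians(arcsec / 3600.0)
    return 2.0 * distance * math.tan(angle_rad / 2.0)


viewing_distance_m = 0.5  # assumed viewing distance, for illustration only
for arcsec in GAP_ARCSEC_RANGE:
    size_mm = size_for_angle(viewing_distance_m, arcsec) * 1000.0
    print(f"{arcsec:g} arcsec at {viewing_distance_m} m -> {size_mm:.3f} mm")
```

At the assumed 0.5 m, the gap thresholds correspond to roughly 0.07 mm to 0.15 mm; the same function shows how much coarser detail must be when the viewer sits further away.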

Law of size constancy

Assuming that we can perceive the object, does its visual angle affect our perception of its size? Given that the visual angle of an object is reduced as it gets further away, we might expect that we would perceive the object as smaller. In fact, our perception of an object's size remains constant even if its visual angle changes. So a person's height is perceived as constant even if they move further from you. This is the law of size constancy, and it indicates that our perception of size relies on factors other than the visual angle.

One of these factors is our perception of depth. If we return to the hilltop scene, there are a number of cues which we can use to determine the relative positions and distances of the objects we see. If objects overlap, the object that is partially covered is perceived to be in the background, and therefore further away. Similarly, the size and height of the object in our field of view provide a cue to its distance. A third cue is familiarity: if we expect an object to be of a certain size, then we can judge its distance accordingly.
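The familiarity cue can be made concrete by running the visual-angle formula backwards: if we assume an object's size S and observe its visual angle V, its distance is D = S / (2·tan(V/2)). This is only a geometric sketch of the idea, not a model of how the visual system actually computes distance; the function name and the 1.7 m height in the example are assumptions for illustration.

```python
import math


def distance_from_familiar_size(expected_size: float, visual_angle_deg: float) -> float:
    """Estimate distance from an object's expected (familiar) size and the
    visual angle it subtends: D = S / (2 * tan(V / 2)).

    The result is in the same unit as `expected_size`.
    """
    if not 0 < visual_angle_deg < 180:
        raise ValueError("visual angle must be between 0 and 180 degrees")
    half_angle = math.radians(visual_angle_deg) / 2.0
    return expected_size / (2.0 * math.tan(half_angle))


# Hypothetical example: a person assumed to be 1.7 m tall subtends 1 degree.
print(round(distance_from_familiar_size(1.7, 1.0), 1))  # 97.4
```

Halving the observed angle roughly doubles the estimated distance, which is why an assumed size that is wrong (a child mistaken for an adult, say) leads to a misjudged distance.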

Perceiving brightness

A second aspect of visual perception is the perception of brightness. Brightness is in fact a subjective reaction to levels of light. It is affected by luminance, which is the amount of light emitted by an object. The luminance of an object is dependent on the amount of light falling on the object's surface and its reflective properties. Contrast is related to luminance: it is a function of the luminance of an object and the luminance of its background.
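The lecture does not commit to a particular formula for contrast. Two common definitions, both functions of object and background luminance only, are Weber contrast, often used for a small target on a uniform background, and Michelson contrast, often used for patterns. The sketch below implements both as an illustration; luminances are assumed to be in consistent units such as cd/m², and the function names are our own.

```python
def weber_contrast(l_object: float, l_background: float) -> float:
    """Weber contrast: (L_object - L_background) / L_background.
    Positive for an object brighter than its background, negative if darker."""
    if l_background <= 0:
        raise ValueError("background luminance must be positive")
    return (l_object - l_background) / l_background


def michelson_contrast(l_a: float, l_b: float) -> float:
    """Michelson contrast: (L_max - L_min) / (L_max + L_min), in [0, 1]."""
    l_max, l_min = max(l_a, l_b), min(l_a, l_b)
    if l_max + l_min == 0:
        raise ValueError("at least one luminance must be positive")
    return (l_max - l_min) / (l_max + l_min)


print(weber_contrast(150.0, 100.0))     # 0.5
print(michelson_contrast(150.0, 50.0))  # 0.5
```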

Although brightness is a subjective response, it can be described in terms of the amount of luminance that gives a just noticeable difference in brightness. However, the visual system itself also compensates for changes in brightness. In dim lighting, the rods predominate in vision. Since there are fewer rods on the fovea, objects in low lighting can be seen less easily when fixated upon, and are more visible in peripheral vision. In normal lighting, the cones take over.

Visual acuity increases with increased luminance. This may be an argument for using high display luminance. However, as luminance increases, flicker also increases. The eye will perceive a light switched on and off rapidly as constantly on. But if the speed of switching is less than 50 Hz, then the light is perceived to flicker. In high luminance, flicker can be perceived at over 50 Hz. Flicker is also more noticeable in peripheral vision. This means that the larger the display, the more it will appear to flicker.
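As a design check, the flicker rule of thumb can be expressed as a comparison against a threshold. In this sketch the 50 Hz default comes from the text; because the lecture notes that flicker can be perceived above 50 Hz at high luminance without giving a number, the threshold is left as a parameter rather than guessed. The function names are hypothetical.

```python
def switching_period_ms(rate_hz: float) -> float:
    """Duration of one on/off cycle in milliseconds."""
    if rate_hz <= 0:
        raise ValueError("rate must be positive")
    return 1000.0 / rate_hz


def flicker_likely(rate_hz: float, threshold_hz: float = 50.0) -> bool:
    """Rule of thumb from the text: a light switched on and off below
    `threshold_hz` is perceived to flicker. 50 Hz is the lecture's figure;
    for high-luminance or large displays the caller should choose a higher
    threshold, since the lecture gives no specific value for that case."""
    return rate_hz < threshold_hz


print(switching_period_ms(50.0))  # 20.0
print(flicker_likely(30.0))       # True
print(flicker_likely(60.0))       # False
```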

Perceiving color

A third factor that we need to consider is the perception of color. Color is usually regarded as being made up of three components:

• hue

• intensity

• saturation

Hue

Hue is determined by the spectral wavelength of the light. Blues have short wavelengths, greens medium and reds long. Approximately 150 different hues can be discriminated by the average person.

Intensity

Intensity is the brightness of the color.

Saturation

Saturation is the amount of whiteness in the color.

By varying these two, intensity and saturation, we can perceive in the region of 7 million different colors. However, the number of colors that can be identified by an individual without training is far smaller.
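Computer graphics offers a close, though not identical, counterpart to these three components in the HSV color model, where H is hue, S is saturation and V ("value") plays the role of intensity. Python's standard `colorsys` module converts RGB to HSV; the sketch below assumes 8-bit RGB input and reports hue in degrees. Note that HSV saturation runs the opposite way to "amount of whiteness": a saturation of 1 means no added white, and 0 means a neutral grey. The function name is hypothetical.

```python
import colorsys


def describe_color(r: int, g: int, b: int) -> dict:
    """Return hue (degrees), saturation and intensity (value) for an 8-bit RGB color.

    Uses HSV as an approximation of the hue/saturation/intensity description;
    HSV is a device-oriented model, not a perceptual one.
    """
    for channel in (r, g, b):
        if not 0 <= channel <= 255:
            raise ValueError("channels must be in the range 0-255")
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return {"hue_deg": round(h * 360.0, 1), "saturation": s, "intensity": v}


print(describe_color(255, 0, 0))      # pure red: hue 0, fully saturated
print(describe_color(255, 128, 128))  # pink: same hue, more whiteness (lower saturation)
```

Red and pink share the same hue but differ in saturation, which is one way to see why varying saturation and intensity alone multiplies the number of distinguishable colors.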

The eye perceives color because the cones are sensitive to light of different wavelengths. There are three different types of cone, each sensitive to a different color (blue, green and red). Color vision is best in the fovea, and worst at the periphery where rods predominate. It should also be noted that only 3-4% of the fovea is occupied by cones which are sensitive to blue light, making blue acuity lower.

Finally, we should remember that around 8% of males and 1% of females suffer from color blindness, most commonly being unable to discriminate between red and green.
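One practical consequence for design is to avoid relying on a red/green hue difference alone to carry meaning. A rough proxy for whether two colors also differ in lightness is the contrast ratio defined in WCAG 2.x, computed from each color's relative luminance. The sketch below follows those published formulas; the specific status colors are hypothetical, and a luminance check only approximates how a viewer with a color deficiency will see the pair.

```python
def _linearize(channel_8bit: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple) -> float:
    """WCAG 2.x relative luminance of an 8-bit sRGB color, 0 (black) to 1 (white)."""
    r, g, b = (_linearize(c) for c in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(rgb_a: tuple, rgb_b: tuple) -> float:
    """WCAG 2.x contrast ratio (L_lighter + 0.05) / (L_darker + 0.05), from 1 to 21."""
    la, lb = relative_luminance(rgb_a), relative_luminance(rgb_b)
    lighter, darker = max(la, lb), min(la, lb)
    return (lighter + 0.05) / (darker + 0.05)


# Hypothetical status colors: do they differ in lightness, not only in hue?
ok_green = (0, 128, 0)
error_red = (204, 0, 0)
print(round(contrast_ratio(ok_green, error_red), 2))
```

A ratio near 1 suggests the pair differs mainly in hue; adding a second cue such as a text label, icon or pattern is a common remedy rather than relying on color alone.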

The capabilities and limitations of visual processing

In considering the way in which we perceive images, we have already encountered some of the capabilities and limitations of the human visual processing system. However, we have concentrated largely on low-level perception. Visual processing involves the transformation and interpretation of a complete image, from the light that is thrown onto the retina. As we have already noted, our expectations affect the way an image is perceived. For example, if we know that an object is a particular size, we will perceive it as that size no matter how far it is from us.

Visual processing compensates for the movement of the image on the retina which occurs as we move around and as the object which we see moves. Although the retinal image is moving, the image that we perceive is stable. Similarly, the color and brightness of objects are perceived as constant, in spite of changes in luminance.

This

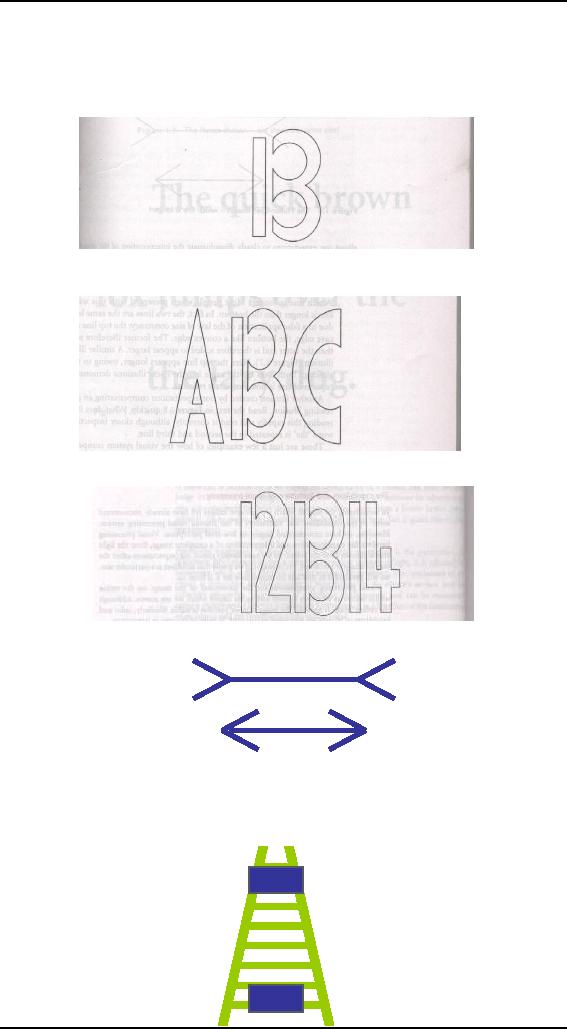

ability to interpret and

exploit our expectations can

be used to resolve

ambiguity.

For example, consider the image shown in figure 'a'. What do you perceive? Now consider figures 'b' and 'c'. The context in which the object appears allows our expectations to clearly disambiguate the interpretation of the object, as either a B or a 13.

Figure a

However, it can also create optical illusions. Consider figure 'd'. Which line is longer?

Figure c; Figure d: the Müller-Lyer illusion (labels: concave, convex)

A similar illusion is the Ponzo illusion, as shown in the figure.
