COGNITIVE FRAMEWORKS: MODES OF COGNITION, HUMAN PROCESSOR MODEL, GOMS

Human Computer Interaction (CS408) VU

Lecture 6. Cognitive Frameworks

Learning Goals

The aim of this lecture is to introduce you to the study of Human Computer Interaction, so that after studying it you will be able to:
• Understand the importance of cognition
• Understand different cognitive frameworks in HCI

6.1 Introduction

Imagine trying to drive a car by using just a computer keyboard. The four arrow keys are used for steering, the space bar for braking, and the return key for accelerating. To indicate left you need to press the F1 key and to indicate right the F2 key. To sound your horn you need to press the F3 key. To switch the headlights on you need to use the F4 key and, to switch the windscreen wipers on, the F5 key. Now imagine that, as you are driving along a road, a ball is suddenly kicked in front of you. What would you do? Bash the arrow keys and the space bar madly while pressing the F4 key? How would you rate your chances of missing the ball?

Most of us would balk at the very idea of driving a car this way. Many early video games, however, were designed along these lines: the user had to press an arbitrary combination of function keys to drive or navigate through the game. More recently, computer consoles have been designed with the user's capabilities and the demands of the activity in mind. Much better ways of controlling and interacting, such as joysticks and steering wheels, are provided that map much better onto the physical and cognitive aspects of driving and navigating.

We have to understand people's limitations in order to make things easier for them. Let us see what cognitive psychology is and how it helps us.

Cognitive Psychology

Psychology is concerned primarily with understanding human behavior and the mental processes that underlie it. To account for human behavior, cognitive psychology has adopted the notion of information processing. Everything we see, feel, touch, taste, smell and do is couched in terms of information processing. The objective of cognitive psychology has been to characterize these processes in terms of their capabilities and limitations.

For example, one of the major preoccupations of cognitive psychologists in the 1960s and 1970s was identifying the amount of information that could be processed and remembered at any one time. Recently, alternative psychological frameworks have been sought which more adequately characterize the way people work with each other and with the various artifacts, including computers, that they have to use. Cognitive psychologists have attempted to apply relevant psychological principles to HCI by using a variety of methods, including the development of guidelines, the use of models to predict human performance and the use of empirical methods for testing computer systems.

Cognition

The dominant framework that has characterized HCI has been cognitive. Let us define cognition first:

Cognition is what goes on in our heads when we carry out our everyday activities.

In general, cognition refers to the processes by which we become acquainted with things or, in other words, how we gain knowledge. These include understanding, remembering, reasoning, attending, being aware, acquiring skills and creating new ideas.

As the figure indicates, there are different kinds of cognition.

Figure: What goes on in the mind? Perceiving, thinking, understanding others, remembering, talking with others, learning, manipulating others, planning a meal, making decisions, imagining a trip, solving problems, painting, daydreaming, writing, composing.

The main objective in HCI has been to understand and represent how humans interact with computers in terms of how knowledge is transmitted between the two. The theoretical grounding for this approach stems from cognitive psychology: it is to explain how human beings achieve the goals they set.

Cognition has also been described in terms of specific kinds of processes. These include:
• Attention
• Perception and recognition
• Memory
• Learning
• Reading, speaking, and listening
• Problem solving, planning, reasoning, decision-making

It is important to note that many of these cognitive processes are interdependent: several may be involved in a given activity. For example, when you try to learn material for an exam, you need to attend to the material, perceive and recognize it, read it, think about it, and try to remember it. Thus cognition typically involves a range of processes. It is rare for one to occur in isolation.

6.2 Modes of Cognition

Norman (1993) distinguishes between two general modes:
1. Experiential cognition
2. Reflective cognition

Experiential cognition

It is the state of mind in which we perceive, act, and react to events around us effectively and effortlessly. It requires reaching a certain level of expertise and engagement. Examples include driving a car, reading a book, having a conversation, and playing a video game.

Reflective cognition

Reflective cognition involves thinking, comparing, and decision-making. This kind of cognition is what leads to new ideas and creativity. Examples include designing, learning, and writing a book.

Norman points out that both modes are essential for everyday life but that each requires different kinds of technological support.

Information processing

One of the many other approaches to conceptualizing how the mind works has been to use metaphors and analogies. A number of comparisons have been made, including conceptualizing the mind as a reservoir, a telephone network, and a digital computer. One prevalent metaphor from cognitive psychology is the idea that the mind is an information processor.

During the 1960s and 1970s the main paradigm in cognitive psychology was to characterize humans as information processors; everything that is sensed (sight, hearing, touch, smell, and taste) was considered to be information, which the mind processes. Information is thought to enter and exit the mind through a series of ordered processing stages. As shown in the figure, within these stages, various processes are assumed to act upon mental representations. Processes include comparing and matching. Mental representations are assumed to comprise images, mental models, rules, and other forms of knowledge.

Figure: Human information processing stages. Input or stimuli -> Stage 1: Encoding -> Stage 2: Comparison -> Stage 3: Response selection -> Stage 4: Response execution -> Output or response.

Stage 1 encodes information from the environment into some form of internal representation. In stage 2, the internal representation of the stimulus is compared with memorized representations that are stored in the brain. Stage 3 is concerned with deciding on a response to the encoded stimulus. When an appropriate match is made the process passes on to stage 4, which deals with the organization of the response and the necessary action. The model assumes that information flow is unidirectional and sequential and that each of the stages takes a certain amount of time, generally thought to depend on the complexity of the operation performed.
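The four-stage model can be sketched as a strictly sequential pipeline in which each stage adds its own processing time. This is only an illustration: the `Stage` class, the `process` function and all the durations are hypothetical and are not taken from the lecture or from any measurement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    duration_ms: int  # assumed value; the lecture gives no figures


# Illustrative durations only (assumptions, not measurements).
STAGES = [
    Stage("encoding", 50),
    Stage("comparison", 80),
    Stage("response selection", 120),
    Stage("response execution", 70),
]


def process(stimulus, stages=STAGES):
    """Pass a stimulus through every stage in order and sum the time taken."""
    trace = []
    elapsed_ms = 0
    for stage in stages:  # one direction only, one stage at a time
        elapsed_ms += stage.duration_ms
        trace.append((stage.name, elapsed_ms))
    return trace, elapsed_ms


trace, total_ms = process("ball kicked into the road")
for name, finished_at in trace:
    print(f"{name:>20}: finished at {finished_at} ms")
print("total:", total_ms, "ms")
```

Because the stages never overlap or loop back, the total time is simply the sum of the stage times, which is the assumption the model makes.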

To illustrate the relationship between the different stages of information processing, consider the sequence involved in sending mail. First, letters are posted in a mailbox. Next, a postman empties the letters from the mailbox and takes them to a central sorting office. Letters are then sorted according to area and sent via rail, road, air or ship to their destination. On reaching their destination, the letters are further sorted into particular areas and then into street locations and so on. A major aspect of an information processing analysis, likewise, is tracing the mental operations and their outcomes for a particular cognitive task. For example, let us carry out an information processing analysis for the cognitive task of determining the phone number of a friend.

Firstly, you must identify the words used in the exercise. Then you must retrieve their meaning. Next you must understand the meaning of the set of words given in the exercise. The next stage involves searching your memory for the solution to the problem. When you have retrieved the number from memory, you need to generate a plan and formulate the answer into a representation that can be translated into a verbal form. Then you would need to recite the digits or write them down.

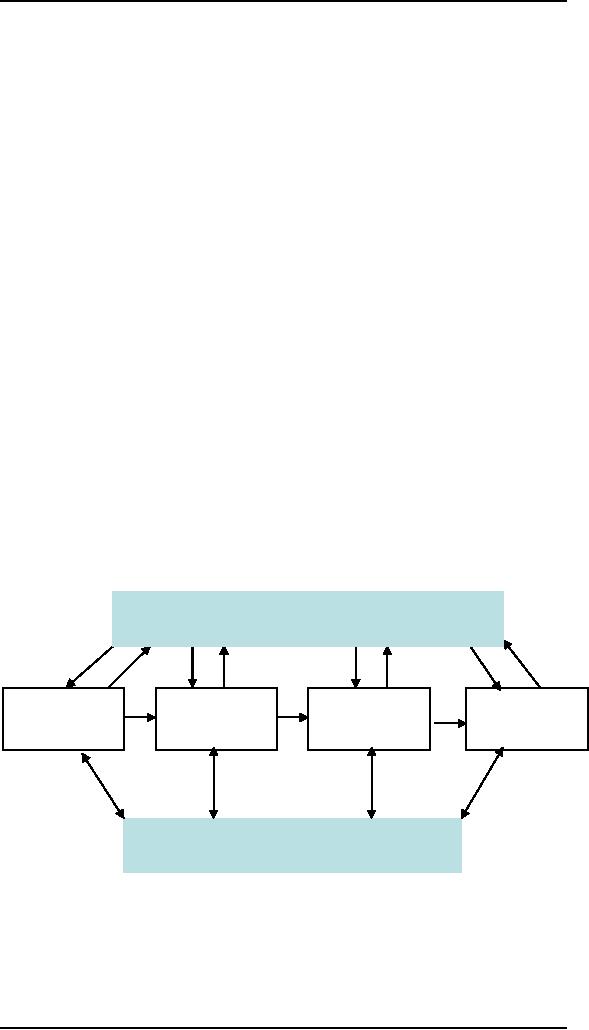

Extending the human information processing model

Two main extensions of the basic information-processing model are the inclusion of the processes of attention and memory. The figure shows the relationship between the different processes. [3]

Figure: Extended information-processing model. Attention and Memory interact with the stages Encoding, Comparison, Response selection and Response execution.

In the extended model, cognition is viewed in terms of:
1. how information is perceived by the perceptual processors,
2. how that information is attended to, and
3. how that information is processed and stored in memory.

6.3 Human processor model

The information-processing model provides a basis from which to make predictions about human performance. Hypotheses can be made about how long someone will take to perceive and respond to a stimulus (also known as reaction time) and what bottlenecks occur if a person is overloaded with too much information. The best-known approach is the human processor model, which models the cognitive processes of a user interacting with a computer. Based on the information-processing model, cognition is conceptualized as a series of processing stages where perceptual, cognitive, and motor processors are organized in relation to one another. The model predicts which cognitive processes are involved when a user interacts with a computer, enabling calculations to be made of how long a user will take to carry out various tasks. This can be very useful when comparing different interfaces. For example, it has been used to compare how well different word processors support a range of editing tasks.
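A minimal sketch of such a calculation is given here, assuming the nominal cycle times that textbooks commonly quote for the perceptual, cognitive and motor processors (roughly 100 ms, 70 ms and 70 ms). These numbers do not appear in this lecture, and the two "interfaces" and the `predict_ms` helper are hypothetical, so the output is only an illustration of the arithmetic.

```python
# Nominal processor cycle times in milliseconds. These are commonly quoted
# textbook values, not figures from this lecture; treat them as assumptions.
CYCLE_MS = {"perceptual": 100, "cognitive": 70, "motor": 70}


def predict_ms(steps):
    """Sum the cycle times for a sequence of (processor, cycles) steps."""
    return sum(CYCLE_MS[processor] * cycles for processor, cycles in steps)


# Simple reaction: perceive the stimulus, decide, press a key.
simple_reaction = [("perceptual", 1), ("cognitive", 1), ("motor", 1)]

# Hypothetical step counts for the same editing task on two interfaces.
interface_a = [("perceptual", 1), ("cognitive", 2), ("motor", 1)]
interface_b = [("perceptual", 2), ("cognitive", 4), ("motor", 3)]

print("simple reaction:", predict_ms(simple_reaction), "ms")
print("interface A:", predict_ms(interface_a), "ms")
print("interface B:", predict_ms(interface_b), "ms")
```

Under these assumptions the interface that needs fewer processor cycles is predicted to be faster, which is how the model supports comparisons between designs.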

The information processing approach is based on modeling mental activities that happen exclusively inside the head. However, most cognitive activities involve people interacting with external kinds of representations, like books, documents, and computers, not to mention one another. For example, when we go home from wherever we have been we do not need to remember the details of the route because we rely on cues in the environment (e.g., we know to turn left at the red house, right when the road comes to a T-junction, and so on). Similarly, when we are at home we do not have to remember where everything is because information is "out there." We decide what to eat and drink by scanning the items in the fridge, find out whether any messages have been left by glancing at the answering machine to see if there is a flashing light, and so on. [2]

6.4 GOMS

Card et al. have abstracted a further family of models, known as GOMS (goals, operators, methods and selection rules), that translate the qualitative descriptions into quantitative measures. The reason for developing a family of models is that it enables various qualitative and quantitative predictions to be made about user performance.

Goals

These are the user's goals, describing what the user wants to achieve. Further, in GOMS the goals are taken to represent a 'memory point' for the user, from which he can evaluate what should be done and to which he may return should any errors occur. [1]

Operators

These are the lowest level of analysis. They are the basic actions that the user must perform in order to use the system. They may affect the system (e.g., press the 'X' key) or only the user's mental state (e.g., read the dialogue box). There is still a degree of flexibility about the granularity of operators; we may take the command level "issue the select command" or be more primitive: "move mouse to menu bar, press center mouse button...." [1]

Methods

As we have already noted, there are typically several ways in which a goal can be split into subgoals. [1]

Selection

Selection rules are the means of choosing between competing methods. [1]
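The four GOMS components can be put together in a small sketch. Everything in it is a hypothetical illustration: the goal, the two methods, the operator names, the operator times and the selection rule are assumptions made for the example and are not taken from Card et al. or from this lecture.

```python
# Goal (hypothetical): delete the currently selected word.
GOAL = "delete selected word"

# Operators with assumed execution times in milliseconds.
OPERATOR_MS = {
    "point with mouse": 1100,
    "click mouse button": 200,
    "press key": 200,
}

# Methods: two competing operator sequences that achieve the same goal.
METHODS = {
    "menu method": [
        "point with mouse",    # move to the Edit menu
        "click mouse button",  # open it
        "point with mouse",    # move to the Delete entry
        "click mouse button",  # choose it
    ],
    "keyboard method": ["press key", "press key"],  # e.g. a two-key shortcut
}


def select_method(hands_on_keyboard):
    """Selection rule: prefer the shortcut when the hands are on the keyboard."""
    return "keyboard method" if hands_on_keyboard else "menu method"


def method_time_ms(method):
    """Quantitative prediction: sum the times of the method's operators."""
    return sum(OPERATOR_MS[op] for op in METHODS[method])


for hands_on_keyboard in (True, False):
    chosen = select_method(hands_on_keyboard)
    print(GOAL, "->", chosen, method_time_ms(chosen), "ms")
```

The qualitative part of the description is the list of methods and the rule for choosing between them; the quantitative part is the sum of operator times for the chosen method.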

One of the problems of abstracting a quantitative model from a qualitative description of user performance is ensuring that the two are connected. In particular, it has been noted that the form and content of the GOMS family of models are relatively unrelated to the form and content of the model human processor, and that it also oversimplifies human behavior. More recently, attention has focused on explaining:

Knowledge Representation Models
• How knowledge is represented

Mental Models
• How mental models (these refer to the representations people construct in their minds of themselves, others, objects and the environment to help them know what to do in current and future situations) develop and are used in HCI

User Interaction Learning Models
• How users learn to interact and become experienced in using computer systems

With respect to applying this knowledge to HCI design, there has been considerable research in developing:

Conceptual Models
Conceptual models (the various ways in which systems are understood by different people), to help designers develop appropriate interfaces.

Interface Metaphor
Interface metaphors (GUIs that consist of electronic counterparts to physical objects in the real world), to match the knowledge requirements of users.

6.5 Recent development in cognitive psychology

With the development of computing, the activity of the brain has been characterized as a series of programmed steps using the computer as a metaphor. Concepts such as buffers, memory stores and storage systems, together with the types of process that act upon them (such as parallel versus serial, top-down versus bottom-up), provided psychologists with a means of developing more advanced models of information processing, which was appealing because such models could be tested. However, since the 1980s there has been a move away from the information-processing framework within cognitive psychology. This has occurred in parallel with the reduced importance of the model human processor within HCI and the development of other theoretical approaches. Primarily, these are the computational and the connectionist approaches. More recently, other alternative approaches have been developed that situate cognitive activity in the context in which it occurs. [3]

Computational Approaches

Computational approaches continue to adopt the computer metaphor as a theoretical framework, but they no longer adhere to the information-processing framework. Instead, the emphasis is on modeling human performance in terms of what is involved when information is processed rather than when and how much. Primarily, computational models conceptualize the cognitive system in terms of the goals, planning and action that are involved in task performance. These aspects include modeling how information is organized and classified, how relevant stored information is retrieved, what decisions are made and how this information is reassembled. Thus tasks are analyzed not in terms of the amount of information processed per se in the various stages but in terms of how the system deals with new information. [3]

Connectionist Approaches

Connectionist approaches, otherwise known as neural networks or parallel distributed processing, simulate behavior by using programming models. However, they differ from computational approaches in that they reject the computer metaphor as a theoretical framework. Instead, they adopt the brain metaphor, in which cognition is represented at the level of neural networks consisting of interconnected nodes. Hence all cognitive processes are viewed as activations of the nodes in the network and the connections between them rather than the processing and manipulation of information. [3]
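The idea of cognition as activation spreading through interconnected nodes can be illustrated with a very small sketch. The network shape, the weights and the choice of a logistic squashing function are all assumptions made for this example; they are not a model described in the lecture.

```python
import math


def sigmoid(x):
    """Squash any input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def activate(inputs, weights, bias=0.0):
    """Activation of one node: squashed weighted sum of incoming activations."""
    return sigmoid(sum(i * w for i, w in zip(inputs, weights)) + bias)


def forward(inputs, layer_weights):
    """Activations of a layer of nodes, each with its own incoming weights."""
    return [activate(inputs, weights) for weights in layer_weights]


# Hypothetical network: 2 input nodes -> 2 hidden nodes -> 1 output node.
inputs = [1.0, 0.0]
hidden = forward(inputs, [[2.0, -1.0], [-2.0, 1.0]])
output = forward(hidden, [[1.5, -1.5]])

print("hidden activations:", hidden)
print("output activation:", output)
```

Nothing in the sketch stores or manipulates symbols; the "result" is just the pattern of activation produced by the connection weights, which is the contrast with the information-processing view.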

6.6 External Cognition

External cognition is concerned with explaining the cognitive processes involved when we interact with different external representations. A main goal is to explicate the cognitive benefits of using different representations for different cognitive activities and the processes involved. The main ones include:
1. externalizing to reduce memory load
2. computational offloading
3. annotating and cognitive tracing.

Externalizing to reduce memory load

A number of strategies have been developed for transforming knowledge into external representations to reduce memory load. One such strategy is externalizing things we find difficult to remember, such as birthdays, appointments and addresses. Externalizing, therefore, can help reduce people's memory burden by:
• reminding them to do something (e.g., to get something for their mother's birthday)
• reminding them of what to do (e.g., to buy a card)
• reminding them of when to do something (e.g., to send it by a certain date)

Computational offloading

Computational offloading occurs when we use a tool or device in conjunction with an external representation to help us carry out a computation. An example is using pen and paper to solve a math problem. [2]

Annotating and cognitive tracing

Another way in which we externalize our cognition is by modifying representations to reflect changes that are taking place that we wish to mark. For example, people often cross things off in a to-do list to show that they have been completed. They may also reorder objects in the environment, say by creating different piles as the nature of the work to be done changes. These two kinds of modification are called annotating and cognitive tracing:
• Annotating involves modifying external representations, such as crossing off or underlining items.
• Cognitive tracing involves externally manipulating items into different orders or structures.
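The difference between the two kinds of modification can be seen in a small to-do list sketch. The `Item` class and the `annotate` and `trace` functions are hypothetical names chosen for this illustration; the items reuse the birthday-card example from earlier in the section.

```python
from dataclasses import dataclass


@dataclass
class Item:
    text: str
    done: bool = False


def annotate(items, index):
    """Annotating: mark an item in place (cross it off) without moving it."""
    items[index].done = True


def trace(items):
    """Cognitive tracing: rearrange the items, here with unfinished work first."""
    return sorted(items, key=lambda item: item.done)


todo = [Item("buy a card"), Item("send the card"), Item("book a table")]
annotate(todo, 0)  # the card has been bought

for item in trace(todo):
    print(("[x] " if item.done else "[ ] ") + item.text)
```

Annotating changes what an item looks like, while cognitive tracing changes where it sits relative to the others; an interface can support either or both.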

Information Visualization

A general cognitive principle for interaction design based on the external cognition approach is to provide external representations at the interface that reduce memory load and facilitate computational offloading. Different kinds of information visualizations can be developed that reduce the amount of effort required to make inferences about a given topic (e.g., financial forecasting, identifying programming bugs). In so doing, they can extend or amplify cognition, allowing people to perceive and do activities that they couldn't do otherwise. [2]

6.7 Distributed cognition

Distributed cognition is an emerging theoretical framework whose goal is to provide an explanation that goes beyond the individual, conceptualizing cognitive activities as embodied and situated within the work context in which they occur. Primarily, this involves describing cognition as it is distributed across individuals and the setting in which it takes place. The collection of actors (more generally referred to just as 'people' in other parts of the text), computer systems and other technology, and their relations to each other in the environmental setting in which they are situated, are referred to as functional systems. The functional systems that have been studied include ship navigation, air traffic control, computer programmer teams and civil engineering practices.

A main goal of the distributed cognition approach is to analyze how the different components of the functional system are coordinated. This involves analyzing how information is propagated through the functional system in terms of technological, cognitive, social and organizational aspects. To achieve this, the analysis focuses on the way information moves and transforms between different representational states of the objects in the functional system and the consequences of these for subsequent actions. [3]

References:
[1] Human Computer Interaction by Alan Dix
[2] Interaction Design by Jenny Preece
[3] Human Computer Interaction by Jenny Preece
