
Human Computer Interaction (CS408)
VU

Lecture 45. Conclusion

Learning Goals

The aim of this lecture is to introduce you to the study of Human Computer Interaction, so that after studying it you will be able to:

• Understand the new and emerging interaction paradigms

The most common interaction paradigm in use today is the desktop computing paradigm. However, there are many other types of interaction paradigms, some of which are described below; many are still emerging.

Ubiquitous computing

Ubiquitous computing (ubicomp, or sometimes ubiqcomp) integrates computation into the environment, rather than having computers which are distinct objects. Other terms for ubiquitous computing include pervasive computing, calm technology, and things that think. Promoters of this idea hope that embedding computation into the environment and everyday objects would enable people to move around and interact with information and computing more naturally and casually than they currently do.

One of the goals of ubiquitous computing is to enable devices to sense changes in their environment and to automatically adapt and act on these changes according to user needs and preferences.
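The sense, adapt and act cycle described above can be illustrated with a minimal Python sketch. It assumes a hypothetical room with a light sensor and an occupancy sensor; the names `Preferences`, `SensorReading` and `adapt_lighting`, and the 300 lux default, are illustrative assumptions rather than part of any real ubicomp system.

```python
from dataclasses import dataclass


@dataclass
class Preferences:
    """Hypothetical per-user preferences that the environment adapts to."""
    min_lux: float = 300.0          # assumed comfortable lighting level
    lights_when_away: bool = False  # keep lights on in an empty room?


@dataclass
class SensorReading:
    """One snapshot of what the (hypothetical) room sensors report."""
    ambient_lux: float  # daylight or other light already present
    occupied: bool      # does the occupancy sensor detect anyone?


def adapt_lighting(reading: SensorReading, prefs: Preferences) -> float:
    """Return how much artificial light (in lux) the room should add.

    Sense: the reading. Adapt: compare it with the user's preferences.
    Act: the returned value would be sent to the light fixture.
    """
    if not reading.occupied and not prefs.lights_when_away:
        return 0.0  # nobody present, so switch the lights off
    shortfall = prefs.min_lux - reading.ambient_lux
    return max(0.0, shortfall)  # top up only what daylight does not provide
```

For example, with the default preferences a dim occupied room (120 lux) gets 180 lux of extra light, while a bright or empty room gets none. The point of the sketch is that the user never issues a command; the environment reacts to sensed context.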

The late Mark Weiser wrote what are considered some of the seminal papers in Ubiquitous Computing, beginning in 1988 at the Xerox Palo Alto Research Center (PARC). Weiser was influenced in a small way by the dystopian Philip K. Dick novel Ubik, which envisioned a future in which everything, from doorknobs to toilet-paper holders, was intelligent and connected. Currently, the art is not as mature as Weiser hoped, but a considerable amount of development is taking place.

The MIT Media Lab has also carried out significant research in this field, which it calls Things That Think.

American writer Adam Greenfield coined the term Everyware to describe the technologies of ubiquitous computing, pervasive computing, ambient informatics and tangible media. The article "All Watched Over by Machines of Loving Grace" contains the first use of the term. Greenfield also used the term as the title of his book Everyware: The Dawning Age of Ubiquitous Computing (ISBN 0321384016).

Early work in Ubiquitous Computing

The initial incarnation of ubiquitous computing was in the form of "tabs", "pads", and "boards" built at Xerox PARC between 1988 and 1994. Several papers describe this work, and there are web pages for the Tabs and for the Boards (which are now a commercial product).

Ubicomp helped kick off the recent boom in mobile computing research, although it is neither the same thing as mobile computing nor a superset or a subset of it.


Ubiquitous Computing has roots in many aspects of computing. In its current form, it was first articulated by Mark Weiser in 1988 at the Computer Science Lab at Xerox PARC. He described it as follows:

Ubiquitous Computing #1

Inspired by the social scientists, philosophers, and anthropologists at PARC, we have been trying to take a radical look at what computing and networking ought to be like. We believe that people live through their practices and tacit knowledge so that the most powerful things are those that are effectively invisible in use. This is a challenge that affects all of computer science. Our preliminary approach: Activate the world. Provide hundreds of wireless computing devices per person per office, of all scales (from 1" displays to wall sized). This has required new work in operating systems, user interfaces, networks, wireless, displays, and many other areas. We call our work "ubiquitous computing". This is different from PDAs, dynabooks, or information at your fingertips. It is invisible, everywhere computing that does not live on a personal device of any sort, but is in the woodwork everywhere.

Ubiquitous Computing #2

For thirty years most interface design, and most computer design, has been headed down the path of the "dramatic" machine. Its highest ideal is to make a computer so exciting, so wonderful, so interesting, that we never want to be without it. A less-traveled path I call the "invisible"; its highest ideal is to make a computer so imbedded, so fitting, so natural, that we use it without even thinking about it. (I have also called this notion "Ubiquitous Computing", and have placed its origins in post-modernism.)

I believe that in the next twenty years the second path will come to dominate. But this will not be easy; very little of our current systems infrastructure will survive. We have been building versions of the infrastructure-to-come at PARC for the past four years, in the form of inch-, foot-, and yard-sized computers we call Tabs, Pads, and Boards. Our prototypes have sometimes succeeded, but more often failed to be invisible. From what we have learned, we are now exploring some new directions for ubicomp, including the famous "dangling string" display.

45.1 Wearable Computing

Personal computers have never quite lived up to their name. There is a limit to the interaction between a user and a personal computer. Wearable computers break this boundary. As the name suggests, these computers are worn on the body like a piece of clothing. Wearable computers have been applied to areas such as behavioral modeling, health monitoring systems, information technologies and media development. Government organizations, the military, and health professionals have all incorporated wearable computers into their daily operations. Wearable computers are especially useful for applications that require computational support while the user's hands, voice, eyes or attention are actively engaged with the physical environment.

Figure: Wristwatch videoconferencing system running GNU/Linux, later featured in Linux Journal and presented at ISSCC 2000.

One of the main features of a wearable computer is constancy: there is constant interaction between the computer and the user, i.e. there is no need to turn the device on or off. Another feature is the ability to multi-task. It is not necessary to stop what you are doing to use the device; it is integrated into all other actions. The user can incorporate such a device so that it acts like a prosthesis, and it can therefore become an extension of the user's mind and/or body.


Such devices look very different from the traditional cyborg image of wearable computers, but in fact they are becoming more powerful and more wearable all the time. The most extensive military program in the wearables arena is the US Army's Land Warrior system, which will eventually be merged into the Future Force Warrior system.

Issues

Since the beginning of time, man has fought man. The difference between the 18th century and the 21st century, however, is that we now fight not only with guns but also with information. One of the most powerful devices of the past few decades is the computer, and the ability to exploit its information capabilities has transformed it into a weapon.

Wearable computers have contributed to an increase in micro-management, that is, to a society characterized by total surveillance and a greater influence of media and technologies.

Surveillance has affected more personal aspects of our daily lives and has been used to punish civilians for seemingly petty crimes. There is a concern that this increased use of cameras, as a form of social control, has intruded on more personal and private moments of our lives.

History

Depending on how broadly one defines both "wearable" and "computer", the first wearable computer could date from as early as the 1500s, with the invention of the pocket watch, or even the 1200s, with the invention of eyeglasses. The first device that would fit the modern-day image of a wearable computer was constructed in 1961 by the mathematician Edward O. Thorp, better known as the inventor of the theory of card counting for blackjack, and Claude E. Shannon, who is best known as "the father of information theory." The system was a concealed, cigarette-pack-sized analog computer designed to predict roulette outcomes. A data-taker would use microswitches hidden in his shoes to indicate the speed of the roulette wheel, and the computer would indicate an octant to bet on by sending musical tones via radio to a miniature speaker hidden in a collaborator's ear canal. The system was successfully tested in Las Vegas in June 1961, but hardware issues with the speaker wires prevented them from using it beyond their test runs. Their wearable was kept secret until it was first mentioned in Thorp's book Beat the Dealer (revised ed.) in 1966, and it was later published in detail in 1969. The 1970s saw the rise of similar roulette-prediction wearable computers using next-generation technology, in particular from a group known as Eudaemonic Enterprises, which used a CMOS 6502 microprocessor with 5K RAM to create a shoe computer with inductive radio communications between a data-taker and a bettor (Bass 1985).

In 1967, Hubert Upton developed an analogue wearable computer that included an eyeglass-mounted display to aid lip reading. Using high- and low-pass filters, the system would determine whether a spoken phoneme was a fricative, stop consonant, voiced fricative, voiced stop consonant, or simply voiced. An LED mounted on ordinary eyeglasses illuminated to indicate the phoneme type.

The 1980s saw the rise of more general-purpose wearable computers. In 1981 Steve Mann designed and built a backpack-mounted 6502-based computer to control flash-bulbs, cameras and other photographic systems. Mann went on to be an early and active researcher in the wearables field, especially known for his 1994 creation of the Wearable Wireless Webcam (Mann 1997). In 1989 Reflection Technology marketed the Private Eye head-mounted display, which scanned a vertical array of LEDs across the visual field using a vibrating mirror.

In 1993, Columbia University developed an augmented-reality system known as KARMA: Knowledge-based Augmented Reality for Maintenance Assistance. Users would wear a Private Eye display over one eye, giving an overlay effect when the real world was viewed with both eyes open. KARMA would overlay wireframe schematics and maintenance instructions on top of whatever was being repaired. For example, graphical wireframes on top of a laser printer would explain how to change the paper tray. The system used sensors attached to objects in the physical world to determine their locations, and the entire system ran tethered from a desktop computer (Feiner 1993).

The commercialization of general-purpose wearable computers, as led by companies such as Xybernaut, CDI and ViA Inc., has thus far met with limited success. Publicly traded Xybernaut tried forging alliances with companies such as IBM and Sony in order to make wearable computing widely available, but in 2005 its stock was delisted and the company filed for Chapter 11 bankruptcy protection amid financial scandal and federal investigation. In 1998 Seiko marketed the Ruputer, a computer in a (fairly large) wristwatch, to mediocre returns. In 2001 IBM developed and publicly displayed two prototypes of a wristwatch computer running Linux, but the product never came to market. In 2002 Fossil, Inc. announced the Fossil WristPDA, which ran Palm OS. Its release was scheduled for summer 2003 but was delayed several times, and it finally became available on January 5, 2005.

45.2 Tangible Bits

The development from the desktop to the physical environment can be divided into two phases. The first was introduced by the Xerox Star workstation in 1981. This workstation was the first generation of graphical user interfaces to set up a "desktop metaphor": it simulates a real physical desktop on a computer screen with a mouse, windows and icons. The Xerox Star workstation also established some important HCI design principles, such as "seeing and pointing". The second phase began ten years later, in 1991, when Mark Weiser described a different paradigm of computing, called "ubiquitous computing". His vision involves moving computers into the background and attempting to make them invisible.

A new paradigm seeks to start the next period of computing by establishing a new type of HCI called "Tangible User Interfaces" (TUIs). With TUIs, its proponents try to make computing truly ubiquitous and invisible. TUIs aim to turn the world itself into an interface (see figure below), with the intention that all surfaces (walls, ceilings, doors, etc.) and objects in the room become an interface between users and their environment.


Below are some examples:

ClearBoard (TMG, 1990-95) explores the idea of turning a passive wall into an active, dynamic collaboration medium. This leads to the vision that all surfaces become active surfaces through which people can interact with other (real and virtual) spaces (see figure below).

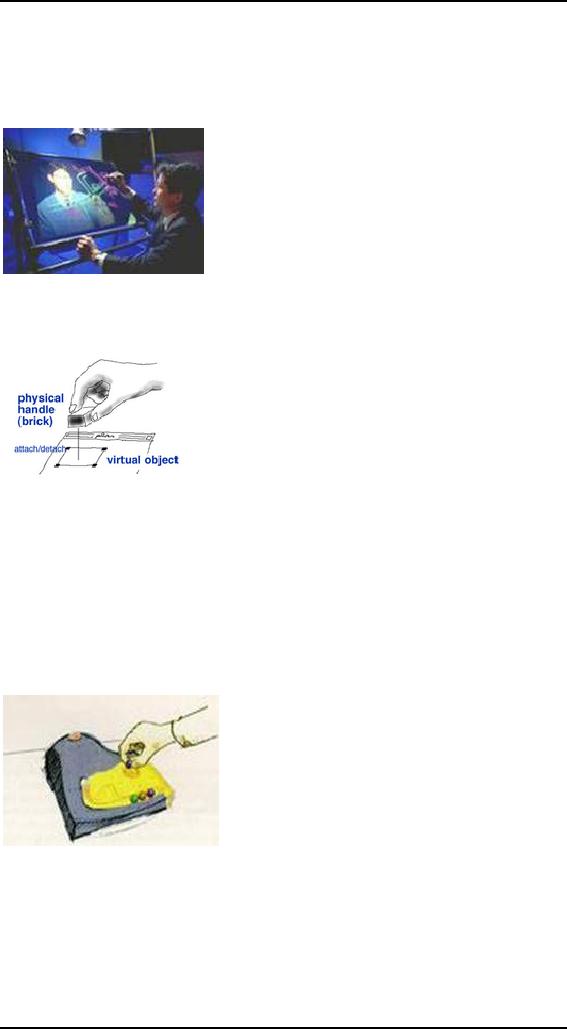

Bricks (TMG, 1990-95) is a graspable user interface that allows direct control of virtual objects through physical handles called "Bricks". These Bricks can be attached to virtual objects and thus make them graspable. This project encouraged two-handed direct manipulation of physical objects (see figure below).

The Marble Answering Machine (by Durrell Bishop, a student at the Royal College of Art) is a prototype telephone answering machine. Incoming voice messages are represented by marbles, which the user can grasp and then drop to play the message or to dial the caller automatically. It shows that computing does not have to take place at a desk but can be integrated into everyday objects. The Marble Answering Machine demonstrates the great potential of making digital information graspable (see figure below).
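The core idea of the Marble Answering Machine, a physical token standing for a piece of digital information, can be sketched as a simple mapping from token IDs to messages. This is a toy model under stated assumptions: it assumes each marble carries a readable ID and that the machine has a "play" slot and a "dial" slot; `MarbleMachine`, `record` and `drop` are hypothetical names, not a description of Bishop's actual hardware.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    caller: str
    audio: str  # stand-in for recorded audio; a real device would store sound


@dataclass
class MarbleMachine:
    """Toy model of a marble answering machine (hypothetical design).

    Each physical marble carries an ID; the machine keeps the mapping
    from marble ID to the digital message that the marble stands for.
    """
    messages: dict = field(default_factory=dict)  # marble ID -> Message
    next_id: int = 0

    def record(self, caller: str, audio: str) -> int:
        """A call comes in: store the message and release a marble for it."""
        marble_id = self.next_id
        self.next_id += 1
        self.messages[marble_id] = Message(caller, audio)
        return marble_id  # the marble that rolls out into the tray

    def drop(self, marble_id: int, slot: str) -> str:
        """The user drops a marble into one of the machine's (assumed) slots."""
        msg = self.messages[marble_id]
        if slot == "play":
            return f"Playing message from {msg.caller}: {msg.audio}"
        if slot == "dial":
            return f"Dialling {msg.caller}"
        raise ValueError(f"unknown slot: {slot}")
```

For example, `m = MarbleMachine()`, then `marble = m.record("Alice", "Call me back")`, then `m.drop(marble, "play")` returns `"Playing message from Alice: Call me back"`. The design choice worth noticing is that the marble itself carries no content; it is only a graspable handle, and the coupling between bits and atoms lives in the machine's mapping.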

Goals and Concepts of "tangible bits"

In our world there exist two realms: the physical environment of atoms and the cyberspace of bits. Interactions between these two spheres are mostly restricted to GUI-based boxes and are, as a result, separated from ordinary physical environments. The senses, work practices and skills for processing information that we have developed in the past are often neglected by current HCI designs.

Goals


There is therefore a need to augment the real physical world by coupling digital information to everyday things. In this way, tangible bits brings the two worlds, cyberspace and the real world, together by making digital information tangible. All states of physical matter, that is, not only solids but also liquids and gases, become interfaces between people and cyberspace. The approach intends to allow users both to "grasp and manipulate" foreground bits and to be aware of background bits. Its proponents also want to remove the distinction between special input and output devices, i.e. between representation and control. Today, interaction devices are divided into input devices such as mice or keyboards and output devices such as screens. Another goal is to replace the one-to-one mapping between physical objects and digital information with a mapping in which one physical object aggregates several pieces of digital information.

Concepts

To achieve these goals, the proponents of tangible bits worked out three key concepts: "interactive surfaces", "coupling bits and atoms" and "ambient media". The concept "interactive surfaces" suggests transforming each surface (walls, ceilings, doors, desktops) into an active interface between the physical and virtual worlds. The concept "coupling bits and atoms" stands for the seamless coupling of everyday objects (cards, books, and toys) with digital information. The concept "ambient media" implies the use of sound, light, air flow and water movement as background interfaces at the periphery of human perception.
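An ambient-media display can be thought of as a mapping from some background quantity to a peripheral signal such as light. The Python sketch below assumes a hypothetical data source (for example, network packets per second) driving a lamp's brightness; the function name `ambient_brightness` and its `smoothing` parameter are illustrative assumptions. Exponential smoothing is used so that the light drifts gradually rather than flickering, which is one plausible way to keep the signal at the periphery of attention.

```python
from typing import Iterable, List


def ambient_brightness(samples: Iterable[float], max_rate: float,
                       smoothing: float = 0.2) -> List[float]:
    """Map a stream of background readings to lamp brightness levels in [0, 1].

    samples   -- readings from a hypothetical source, e.g. packets per second
    max_rate  -- reading that corresponds to full brightness
    smoothing -- exponential-smoothing factor in (0, 1]; small values make
                 the light change slowly so it does not demand attention
    """
    if max_rate <= 0:
        raise ValueError("max_rate must be positive")
    level = 0.0
    levels = []
    for rate in samples:
        target = min(max(rate / max_rate, 0.0), 1.0)  # normalise and clamp to [0, 1]
        level += smoothing * (target - level)          # ease toward the target
        levels.append(round(level, 3))
    return levels
```

With `smoothing=0.5`, two consecutive readings at full rate produce brightness levels of 0.5 and then 0.75: the lamp approaches full brightness over several steps instead of jumping, so a change in the background activity is noticeable without being intrusive.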

45.3 Attentive Environments

Attentive environments are environments that are user- and context-aware. One project that explores these themes is IBM's BlueEyes research project, which is chartered to explore and define attentive environments.

Animal survival depends on highly developed sensory abilities. Likewise, human cognition depends on highly developed abilities to perceive, integrate, and interpret visual, auditory, and touch information. Without a doubt, computers would be much more powerful if they had even a small fraction of the perceptual ability of animals or humans. Adding such perceptual abilities to computers would enable computers and humans to work together more as partners. Toward this end, the BlueEyes project aims at creating computational devices with the sort of perceptual abilities that people take for granted.

How can we make computers "see" and "feel"?

BlueEyes uses sensing technology to identify a user's actions and to extract key information. This information is then analyzed to determine the user's physical, emotional, or informational state, which in turn can be used to help make the user more productive by performing expected actions or by providing expected information.

For example, a BlueEyes-enabled television could become active when the user makes eye contact, at which point the user could then tell the television to "turn on".
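The television example combines two sensing channels: eye contact signals that the user is addressing the device, and only then is a spoken command acted upon. The Python sketch below models that gating as a small state machine. It is a hypothetical illustration, not IBM's BlueEyes implementation: the class `AttentiveTV`, its methods, and the 5-second `ATTENTION_WINDOW` are all assumptions, and gaze detection and speech recognition are taken as given inputs.

```python
from dataclasses import dataclass
from typing import Optional

ATTENTION_WINDOW = 5.0  # seconds a glance keeps the TV "listening" (assumed value)


@dataclass
class AttentiveTV:
    """Toy attentive appliance: speech is accepted only after eye contact."""
    powered: bool = False
    last_gaze: Optional[float] = None  # time of the most recent eye contact

    def on_gaze(self, t: float) -> None:
        """The (assumed) eye-tracking sensor reports eye contact at time t."""
        self.last_gaze = t

    def is_attending(self, t: float) -> bool:
        """True if the user looked at the TV within the attention window."""
        return self.last_gaze is not None and t - self.last_gaze <= ATTENTION_WINDOW

    def on_speech(self, t: float, command: str) -> bool:
        """Handle a recognised utterance; return True if the TV acted on it."""
        if not self.is_attending(t):
            return False  # the user was not addressing the TV, so ignore it
        if command == "turn on":
            self.powered = True
            return True
        if command == "turn off":
            self.powered = False
            return True
        return False  # unrecognised command
```

Saying "turn on" before any eye contact is ignored; after `on_gaze(1.0)`, the same command at time 2.5 powers the set on, while a command long after the glance is ignored again. Gating speech on gaze is one way such a device could avoid reacting to conversations not directed at it.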

In the future, ordinary household devices, such as televisions, refrigerators, and ovens, may be able to do their jobs when we look at them and speak to them.
