
THE PSYCHOLOGY OF ACTIONS: MENTAL MODEL, ERRORS


Human Computer Interaction (CS408) VU

Lecture 11. The Psychology of Actions

Learning Goals

The aim of this lecture is to introduce you to the study of Human Computer Interaction, so that after studying it you will be able to:

• Understand mental models

• Understand the psychology of actions

• Discuss errors

11.1 Mental model

The concept of the mental model has manifested itself in psychological theorizing and HCI research in a multitude of ways. It is difficult to provide a definitive description, because different assumptions and constraints are brought to bear on the different phenomena it has been used to explain. A well-known definition, in the context of HCI, is provided by Donald Norman: 'the model people have of themselves, others, the environment, and the things with which they interact. People form mental models through experience, training and instruction'.

It should be noted that the term mental model was in fact first developed in the early 1940s by Kenneth Craik. He proposed that thinking '...models, or parallels reality':

'If the organism carries a "small-scale model" of external reality and of its own possible actions within its head, it is able to try out various alternatives, conclude which is the best of them, react to future situations before they arise, utilize the knowledge of past events in dealing with the present and future, and in every way to react in a much fuller, safer, and more competent manner to emergencies which face it.'

Just as an engineer will build scale models of a bridge in order to test out certain stresses prior to building the real thing, so, too, do we build mental models of the world in order to make predictions about an external event before carrying out an action. Although our construction and use of mental models may not be as extensive or as complete as Craik's hypothesis suggests, most of us can probably recall using a form of mental simulation at some time or other. An important observation about these types of mental models is that they are invariably incomplete, unstable, and easily confusable, and are often based on superstition rather than scientific fact.

Within cognitive psychology the term mental model has since been explicated by Johnson-Laird (1983, 1988) with respect to its structure and function in human reasoning and language understanding. In terms of the structure of mental models, he argues that mental models are either analogical representations or a combination of analogical and propositional representations. They are distinct from, but related to, images. A mental model represents the relative position of a set of objects in an analogical manner that parallels the structure of the state of objects in the world. An image also does this, but more specifically in terms of a view of a particular model.

An important difference between images and mental models is in terms of their function. Mental models are usually constructed when we are required to make an inference or a prediction about a particular state of affairs. In constructing the mental model a conscious mental simulation may be 'run' from which conclusions about the predicted state of affairs can be deduced. An image, on the other hand, is considered to be a one-off representation. A simplified analogy is to consider an image to be like a frame in a movie while a mental model is more like a short snippet of a movie.

So, after this discussion we can say that while learning and using a system, people develop knowledge of how to use the system and, to a lesser extent, how the system works. These two kinds of knowledge are often referred to as a user's mental model.

Having developed a mental model of an interactive product, it is assumed that people will use it to make inferences about how to carry out tasks when using the interactive product. Mental models are also used to fathom what to do when something unexpected happens with a system and when encountering unfamiliar systems. The more someone learns about a system and how it functions, the more their mental model develops. For example, TV engineers have a deep mental model of how TVs work that allows them to work out how to fix them. In contrast, an average citizen is likely to have a reasonably good mental model of how to operate a TV but a shallow mental model of how it works.

To illustrate how we use mental models in our everyday reasoning, imagine the following scenario:

• You arrive home from a holiday on a cold winter's night to a cold house. You have a small baby and you need to get the house warm as quickly as possible. Your house is centrally heated. Do you set the thermostat as high as possible or turn it to the desired temperature (e.g., 70F)?

Most people, when asked the question, imagine the scenario in terms of what they would do in their own house, and they choose the first option. When asked why, a typical explanation is that setting the temperature as high as possible increases the rate at which the room warms up. While many people may believe this, it is incorrect.

There are two commonly held folk theories about thermostats: the timer theory and the valve theory. The timer theory proposes that the thermostat simply controls the relative proportion of time that the device stays on. Set the thermostat midway, and the device is on about half the time; set it all the way up and the device is on all the time; hence, to heat or cool something most quickly, set the thermostat so that the device is on all the time. The valve theory proposes that the thermostat controls how much heat comes out of the device. Turn the thermostat all the way up, and you get maximum heating or cooling.

Thermostats work by switching on the heat and keeping it going at a constant rate until the desired temperature is reached, at which point they cut out. They cannot control the rate at which heat is given out from a heating system. Left at a given setting, thermostats will turn the heat on and off as necessary to maintain the desired temperature.

A thermostat treats the heater, oven, or air conditioner as an all-or-nothing device that can be either fully on or fully off, with no in-between states. The thermostat turns the heater, oven, or air conditioner completely on, at full power, until the temperature setting on the thermostat is reached. Then it turns the unit completely off. Setting the thermostat at one extreme cannot affect how long it takes to reach the desired temperature.
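The on-off behavior described above can be illustrated with a small simulation. This is only a sketch under assumed numbers: the starting temperature, outside temperature, heater output, and heat-loss coefficient are all hypothetical, and the function name `minutes_to_reach` is invented for this example. It counts how many simulated minutes a room takes to reach a target temperature for a given thermostat setting.

```python
def minutes_to_reach(target_f, setpoint_f, start_f=50.0, outside_f=30.0,
                     heater_gain_f_per_min=1.0, loss_per_min=0.01,
                     max_minutes=10_000):
    """Simulate an on-off thermostat; return minutes until the room reaches target_f.

    All physical constants are illustrative assumptions, not measured values.
    """
    temp = start_f
    for minute in range(max_minutes):
        if temp >= target_f:
            return minute
        # All-or-nothing decision: the heater is fully on or fully off.
        heater_on = temp < setpoint_f
        heat_in = heater_gain_f_per_min if heater_on else 0.0
        # Heat leaks out in proportion to the indoor/outdoor difference.
        heat_out = loss_per_min * (temp - outside_f)
        temp += heat_in - heat_out
    raise RuntimeError("target temperature was never reached")


# Setting the thermostat to 70F or to 90F gives the same time to reach 70F,
# because the heater runs at the same full power in both cases.
print(minutes_to_reach(70, 70), minutes_to_reach(70, 90))
```

In this model the only effect of the higher setting is that the heater keeps running past 70F, so the room overshoots the temperature that was actually wanted.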

The real point of the example is not that some people have erroneous theories; it is that everyone forms theories (mental models) to explain what they have observed. In the case of the thermostat the design gives absolutely no hint as to the correct answer. In the absence of external information, people are free to let their imaginations run free as long as the mental models they develop account for the facts as they perceive them.

Why do people use erroneous mental models?

It seems that in the above scenario, they are running a mental model based on a general valve theory of the way something works. This assumes the underlying principle of "more is more": the more you turn or push something, the more it causes the desired effect. This principle holds for a range of physical devices, such as taps and radio controls, where the more you turn them, the more water or volume is given. However, it does not hold for thermostats, which instead function based on the principle of an on-off switch. What seems to happen is that in everyday life people develop a core set of abstractions about how things work, and apply these to a range of devices, irrespective of whether they are appropriate.

Using incorrect mental models to guide behavior is surprisingly common. Just watch people at a pedestrian crossing or waiting for an elevator (lift). How many times do they press the button? A lot of people will press it at least twice. When asked why, a common reason given is that they think it will make the lights change faster or ensure the elevator arrives. This seems to be another example of following the "more is more" philosophy: it is believed that the more times you press the button, the more likely it is to result in the desired effect.

Another common example of an erroneous mental model is what people do when the cursor freezes on their computer screen. Most people will bash away at all manner of keys in the vain hope that this will make it work again. However, ask them how this will help and their explanations are rather vague. The same is true when the TV starts acting up: a typical response is to hit the top of the box repeatedly with a bare hand or a rolled-up newspaper. Again, ask people why and their reasoning about how this behavior will help solve the problem is rather lacking.

Indeed, research has shown that people's mental models of the way interactive devices work are poor, often being incomplete, easily confusable, and based on inappropriate analogies and superstition. Not having appropriate mental models available to guide their behavior is what causes people to become very frustrated, often resulting in stereotypical "venting" behavior like that described above.

On the other hand, if people could develop better mental models of interactive systems, they would be in a better position to know how to carry out their tasks efficiently and what to do if the system started acting up. Ideally, they should be able to develop a mental model that matches the conceptual model developed by the designer. But how can you help users to accomplish this? One suggestion is to educate them better; however, many people are resistant to spending much time learning about how things work, especially if it involves reading manuals and other documentation. An alternative proposal is to design systems to be more transparent, so that they are easier to understand.

People do tend to find causes for events, and just what they assign as the cause varies. In part, people tend to assign a causal relation whenever two things occur in succession. If I do some action A just prior to some result R, then I conclude that A must have caused R, even if there really was no relationship between the two.

Self-blaming

Suppose I try to use an everyday thing, but I can't: where is the fault, in my action or in the thing? We are apt to blame ourselves. If we believe that others are able to use the device and if we believe that it is not very complex, then we conclude that any difficulties must be our own fault. Suppose the fault really lies in the device, so that lots of people have the same problems. Because everyone perceives the fault to be his or her own, nobody wants to admit to having trouble. This creates a conspiracy of silence, maintaining the feeling of guilt and helplessness among users.

Interestingly enough, the common tendency to blame ourselves for failures with everyday objects goes against the normal attributions people make. In general, it has been found that people attribute their own problems to the environment, and those of other people to their personalities.

It seems natural for people to blame their own misfortunes on the environment. It seems equally natural to blame other people's misfortunes on their personalities. Just the opposite attribution, by the way, is made when things go well. When things go right, people credit their own forceful personalities and intelligence. The onlookers do the reverse. When they see things go well for someone else, they credit the environment.

In all cases, whether a person is inappropriately accepting blame for the inability to work simple objects or attributing behavior to environment or personality, a faulty mental model is at work.

Reason for self-blaming

Learned helplessness

The phenomenon called learned helplessness might help explain the self-blame. It refers to the situation in which people experience failure at a task, often numerous times. As a result, they decide that the task cannot be done, at least not by them: they are helpless. They stop trying. If this feeling covers a group of tasks, the result can be severe difficulties coping with life. In the extreme case, such learned helplessness leads to depression and to a belief that the person cannot cope with everyday life at all. Sometimes all that it takes to get such a feeling of helplessness is a few experiences that accidentally turn out bad. The phenomenon has been most frequently studied as a precursor to the clinical problem of depression, but it might easily arise with a few bad experiences with everyday life.

Taught helplessness

Do the common technology and mathematics phobias result from a kind of learned helplessness? Could a few instances of failure in what appear to be straightforward situations generalize to every technological object, every mathematics problem? Perhaps. In fact, the design of everyday things seems almost guaranteed to cause this. We could call this phenomenon taught helplessness.

With badly designed objects (constructed so as to lead to misunderstanding), faulty mental models, and poor feedback, no wonder people feel guilty when they have trouble using objects, especially when they perceive that nobody else is having the same problems. The problem is that once failure starts, it soon generalizes by self-blame to all technology. The vicious cycle starts: if you fail at something, you think it is your fault. Therefore you think you can't do that task. As a result, next time you have to do the task, you believe you can't, so you don't even try. The result is that you can't, just as you thought. You are trapped in a self-fulfilling prophecy.

The nature of human thought and explanation

It isn't always easy to tell just where the blame for a problem should be placed. A number of dramatic accidents have come about, in part, from the false assessment of blame in a situation. Highly skilled, well-trained people are using complex equipment when suddenly something goes wrong. They have to figure out what the problem is. Most industrial equipment is pretty reliable. When the instruments indicate that something is wrong, one has to consider the possibility that the instruments themselves are wrong. Often this is the correct assessment. When operators mistakenly blame the instruments for an actual equipment failure, the situation is ripe for a major accident.

It is spectacularly easy to find examples of false assessment in industrial accidents. Analysts come in well after the fact, knowing what actually did happen; with hindsight, it is almost impossible to understand how the people involved could have made the mistake. But from the point of view of the person making decisions at the time, the sequence of events is quite natural.

Three Mile Island Nuclear Power Plant

At the Three Mile Island nuclear power plant, operators pushed a button to close a valve; the valve had been opened (properly) to allow excess water to escape from the nuclear core. In fact, the valve was deficient, so it didn't close. But a light on the control panel indicated that the valve position was closed. The light actually didn't monitor the valve, only the electrical signal to the valve, a fact known by the operators. Still, why suspect a problem? The operators did look at the temperature in the pipe leading from the valve: it was high, indicating that fluid was still flowing through the closed valve. Ah, but the operators knew that the valve had been leaky, so the leak would explain the high temperature; but the leak was known to be small, and operators assumed that it wouldn't affect the main operation. They were wrong, and the water that was able to escape from the core added significantly to the problems of that nuclear disaster. Norman says that the operators' assessment was perfectly reasonable: the fault was in the design of the lights and in the equipment that gave false evidence of a closed valve.
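The gap between what the light reported and what the valve was doing can be sketched in a few lines. This is a toy model only, not a description of the actual plant instrumentation: the class `ReliefValve`, its fields, and the two indicator functions are hypothetical names invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class ReliefValve:
    commanded_closed: bool = False  # the electrical signal sent to the valve
    actually_closed: bool = False   # the physical position of the valve
    stuck_open: bool = False        # hypothetical fault: valve ignores the command

    def command_close(self) -> None:
        """Send the close signal; a stuck valve does not physically move."""
        self.commanded_closed = True
        if not self.stuck_open:
            self.actually_closed = True


def light_from_signal(valve: ReliefValve) -> bool:
    """Indicator wired to the command signal, as the text describes."""
    return valve.commanded_closed


def light_from_position(valve: ReliefValve) -> bool:
    """Alternative design (an assumption): indicator wired to the real position."""
    return valve.actually_closed


valve = ReliefValve(stuck_open=True)
valve.command_close()
print(light_from_signal(valve))    # True: the panel says "closed"
print(light_from_position(valve))  # False: the valve is still open
```

The sketch makes the design point concrete: an indicator that reports the command rather than the state gives the operator feedback about their own action, not about the world.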

Lockheed L-1011

Similarly, many airline accidents have resulted from misinterpretations. Consider the flight crew of the Lockheed L-1011 flying from Miami, Florida, to Nassau, Bahamas. The plane was over the Atlantic Ocean, about 110 miles from Miami, when the low oil pressure light for one of the three engines went on. The crew turned off the engine and turned around to go back to Miami. Eight minutes later, the low-pressure lights for the remaining two engines also went on, and the instruments showed zero oil pressure and quantity in all three engines. What did the crew do now? They didn't believe it! After all, the pilot correctly said later, the likelihood of simultaneous oil exhaustion in all three engines was "one in millions I would think." At the time, sitting in the airplane, simultaneous failure did seem most unlikely. Even the National Transportation Safety Board declared, "The analysis of the situation by the flight crew was logical, and was what most pilots probably would have done if confronted by the same situation."

What happened? The second and third engines were indeed out of oil, and they failed. So there were no operating engines: one had been turned off when its gauge registered low, the other two had failed. The pilots prepared the plane for an emergency landing on the water. The pilots were too busy to instruct the flight crew properly, so the passengers were not prepared. There was semi-hysteria in the passenger cabin. At the last minute, just as the plane was about to ditch in the ocean, the pilots managed to restart the first engine and land safely at Miami. Then that engine failed at the end of the runway.

Why did all three engines fail? Three missing O-rings, one missing from each of three oil plugs, allowed all the oil to seep out. The oil plugs were put in by two different people who worked on the three engines (one for the two plugs on the wings, the other for the plug on the tail). How did both workers make the same mistake? Because the normal method by which they got the oil plugs had been changed that day.

The whole tale is very instructive, for there were four major failures of different sorts, from the omission of the O-rings, to the inadequacy of the maintenance procedures, to the false assessment of the problem, to the poor handling of the passengers. Fortunately nobody was injured. The analysts of the National Transportation Safety Board got to write a fascinating report.

Find an explanation, and we are happy. But our explanations are based on analogy with past experience, experience that may not apply in the current situation. In the Three Mile Island incident, past experience with the leaky valve explained away the discrepant temperature reading; on the flight from Miami to Nassau, the pilots' lack of experience with simultaneous oil pressure failure triggered their belief that the instruments must be faulty. Once we have an explanation, correct or incorrect, for otherwise discrepant or puzzling events, there is no more puzzle, no more discrepancy. As a result, we are complacent, at least for a while.

How people do things

To get something done, you have to start with some notion of what is wanted: the goal that is to be achieved. Then, you have to do something to the world, that is, take action to move yourself or manipulate someone or something. Finally, you check to see that your goal was made. So there are four different things to consider: the goal, what is done to the world, the world itself, and the check of the world. The action itself has two major aspects: doing something and checking. Call these execution and evaluation.

Goals do not state precisely what to do: where and how to move, what to pick up. To lead to actions, goals must be transformed into specific statements of what is to be done, statements that are called intentions. A goal is something to be achieved, often vaguely stated. An intention is a specific action taken to get to the goal. Yet even intentions are not specific enough to control actions.

Suppose I am sitting in my armchair, reading a book. It is dusk, and the light has gotten dimmer and dimmer. I decide I need more light (that is the goal: get more light). My goal has to be translated into the intention that states the appropriate action in the world: push the switch button on the lamp. There's more: I need to specify how to move my body, how to stretch to reach the light switch, how to extend my finger to push the button (without knocking over the lamp). The goal has to be translated into an intention, which in turn has to be made into a specific action sequence, one that can control my muscles. Note that I could satisfy my goal with other action sequences, other intentions. If someone walked into the room and passed by the lamp, I might alter my intention from pushing the switch button to asking the other person to do it for me. The goal hasn't changed, but the intention and resulting action sequence have.

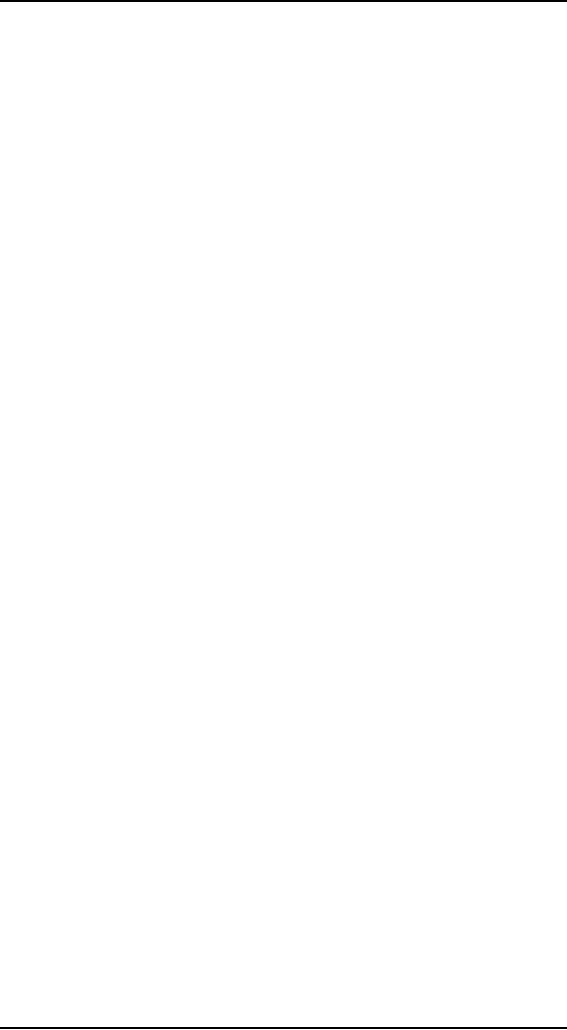

Action Cycle

[Figure: the action cycle. Goals (what we want to happen); Execution (what we do to the world); Evaluation (comparing what happened with what we wanted to happen); The World.]

Human action has two aspects, execution and evaluation. Execution involves doing something. Evaluation is the comparison of what happened in the world with what we wanted to happen.

Stages of Execution

Start at the top with the goal, the state that is to be achieved. The goal is translated into an intention to do some action. The intention must be translated into a set of internal commands, an action sequence that can be performed to satisfy the intention. The action sequence is still a mental event: nothing happens until it is executed, performed upon the world.

Stages of Evaluation

Evaluation starts with our perception of the world. This perception must then be interpreted according to our expectations and then compared with respect to both our intentions and our goals.

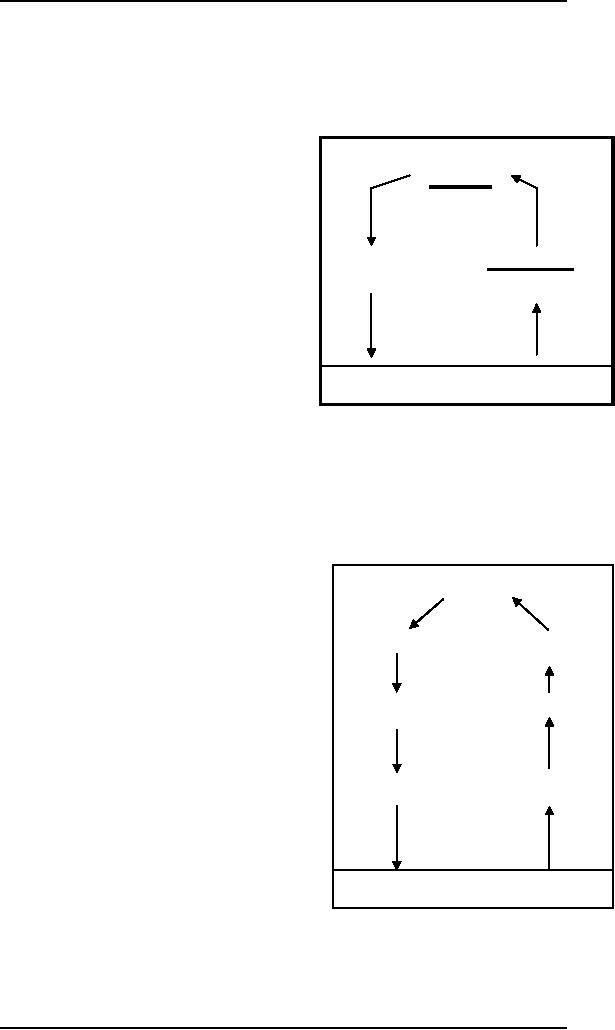

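Seven stages of action

[Figure: the seven stages of action. Goals at the top; on the execution side: intention to act, sequence of actions, execution of the action sequence; on the evaluation side: perceiving the state of the world, interpreting the perception, evaluation of interpretations; The World at the bottom.]

The stages of execution (intentions, action sequence, and execution) are coupled with the stages of evaluation (perception, interpretation, and evaluation), with goals common to both stages.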
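The seven stages can be walked through as a toy program using the reading-lamp example from earlier in the lecture. This is a sketch, not a cognitive model: the `Stage` enum, the `INTENTIONS` table, and `run_cycle` are hypothetical names invented for illustration, and the "world" is just a dictionary.

```python
from enum import Enum


class Stage(Enum):
    GOAL = 1
    INTENTION = 2
    ACTION_SEQUENCE = 3
    EXECUTION = 4
    PERCEPTION = 5
    INTERPRETATION = 6
    EVALUATION = 7


def push_switch(world):
    world["lamp_on"] = True


def ask_other_person(world):
    world["asked_for_help"] = True
    world["lamp_on"] = True


# Different intentions (and action sequences) can serve the same goal.
INTENTIONS = {
    "push the switch": [push_switch],
    "ask someone else": [ask_other_person],
}


def run_cycle(world, goal, intention):
    """Run one pass of the cycle; return (goal achieved?, stages visited)."""
    trace = [Stage.GOAL]                      # the state we want
    action_sequence = INTENTIONS[intention]   # intention -> concrete steps
    trace += [Stage.INTENTION, Stage.ACTION_SEQUENCE]
    for step in action_sequence:              # act upon the world
        step(world)
    trace.append(Stage.EXECUTION)
    perceived = dict(world)                   # perceive the state of the world
    trace.append(Stage.PERCEPTION)
    relevant = {key: perceived.get(key) for key in goal}  # interpret it
    trace.append(Stage.INTERPRETATION)
    achieved = relevant == goal               # compare with the goal
    trace.append(Stage.EVALUATION)
    return achieved, trace


achieved, trace = run_cycle({"lamp_on": False}, {"lamp_on": True}, "push the switch")
print(achieved, [stage.name for stage in trace])
```

Swapping the intention to "ask someone else" leaves the goal and the evaluation unchanged, which mirrors the point that the goal is common to both sides of the cycle.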

11.2 Errors

Human capability for interpreting and manipulating information is quite impressive. However, we do make mistakes. Whenever we try to learn a new skill, be it skiing, typing, cooking or playing chess, we are bound to make mistakes. Some are trivial, resulting in no more than temporary inconvenience or annoyance. Others may be more serious, requiring substantial effort to correct. In most situations this is not such a bad thing, because the feedback from making errors can help us to learn and understand an activity. When learning to use a computer system, however, learners are often frightened of making errors because, as well as making them feel stupid, they think it can result in catastrophe. Hence, the anticipation of making an error and its consequences can hinder a user's interaction with a system.

Why do we make mistakes and can we avoid them? In order to answer the latter part of the question we must first look at what is going on when we make an error. There are several different types of errors. Some errors result from changes in the context of skilled behavior. If a pattern of behavior has become automatic and we change some aspect of it, the more familiar pattern may break through and cause an error. A familiar example of this is where we intend to stop at the shop on the way home from work but in fact drive past. Here, the activity of driving home is the more familiar and overrides the less familiar intention.

Other errors result from an incorrect understanding, or model, of a situation or system. People build their own theories to understand the causal behavior of systems. These have been termed mental models. They have a number of characteristics. Mental models are often partial: the person does not have a full understanding of the working of the whole system. They are unstable and are subject to change. They can be internally inconsistent, since the person may not have worked through the logical consequences of their beliefs. They are often unscientific and may be based on superstition rather than evidence. Often they are based on an incorrect interpretation of the evidence.

A classification of errors

There are various types of errors. Norman has categorized them into two main types, slips and mistakes:

Mistakes

Mistakes occur through conscious deliberation. An incorrect action is taken based on an incorrect decision. For example, trying to throw the icon of the hard disk into the wastebasket, in the desktop metaphor, as a way of removing all existing files from the disk is a mistake. A menu option to erase the disk is the appropriate action.

Slips

Slips are unintentional. They happen by accident, such as making typos by pressing the wrong key or selecting the wrong menu item by overshooting. The most frequent errors are slips, especially in well-learned behavior.
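The distinction between the two types can be written as a small decision rule. This is a deliberately simplified sketch: the function `classify_error` and its three arguments are hypothetical, and it assumes we already know which action would have been appropriate, what the person intended, and what they actually did.

```python
def classify_error(appropriate, intended, performed):
    """Classify an outcome as 'no error', 'mistake', or 'slip'.

    mistake: the person chose the wrong action (the intention itself was wrong).
    slip:    the intention was right, but a different action was carried out.
    """
    if performed == appropriate:
        return "no error"
    if intended != appropriate:
        return "mistake"
    return "slip"


# Dragging the disk icon to the wastebasket was a deliberate but wrong choice.
print(classify_error("erase-disk menu option",
                     "drag disk to wastebasket",
                     "drag disk to wastebasket"))

# The user meant to pick the right menu item but overshot to the one below it.
print(classify_error("Save", "Save", "Save As"))
```

The rule reflects where in the action cycle the error arises: a mistake is an error in forming the intention, while a slip is an error in carrying it out.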
